As your organization transforms to embrace the wealth of digital information that is becoming available, the capability to store, manage and consume this data in any given format or product becomes an increasing burden for operations.

How does your organization handle the request, “I need to use product Z to store data for my new project?” There are several responses I have experienced first-hand with clients.

- Enforce the company policy that Products O and S are all that can be used.

- Ignore the request.

- Consider the request, but antagonize your own internal organization with long wait times (e.g. months or years) and with repeated delays to evaluate a product you simply do not want to support.

- Do whatever the developers say; they know best.

Unfortunately, I have seen too many organizations use one of the first three options as the answer. You may consider the last option an invalid answer; however, I have also seen it prevail when there is no operations team or strategic technical oversight.

Ignoring the question only leads to a greater pressure point at a later time. This may be when your executive team enforces the requirement on its own timetable. I have seen this happen, with painful ramifications. Because public cloud resources can be consumed with nothing more than a credit card, developers who are ignored or delayed can now proceed unchecked more easily. When a successful proof of concept is produced this way, and there is suddenly an urgent need to deploy, support and manage it, the opportunity to have a positive impact on the design decisions for a new data product has passed.

Using DBaaS is one enabling tool within a strategic business model that lets your organization answer this question with greater control. DBaaS is not the whole solution, however, but rather one tool to be combined with applicable processes. In order to scope the requirements for the original question, your model also needs to consider the following:

- Provisioning capabilities

- Strategic planning and insight

- Support and management

- Release criteria

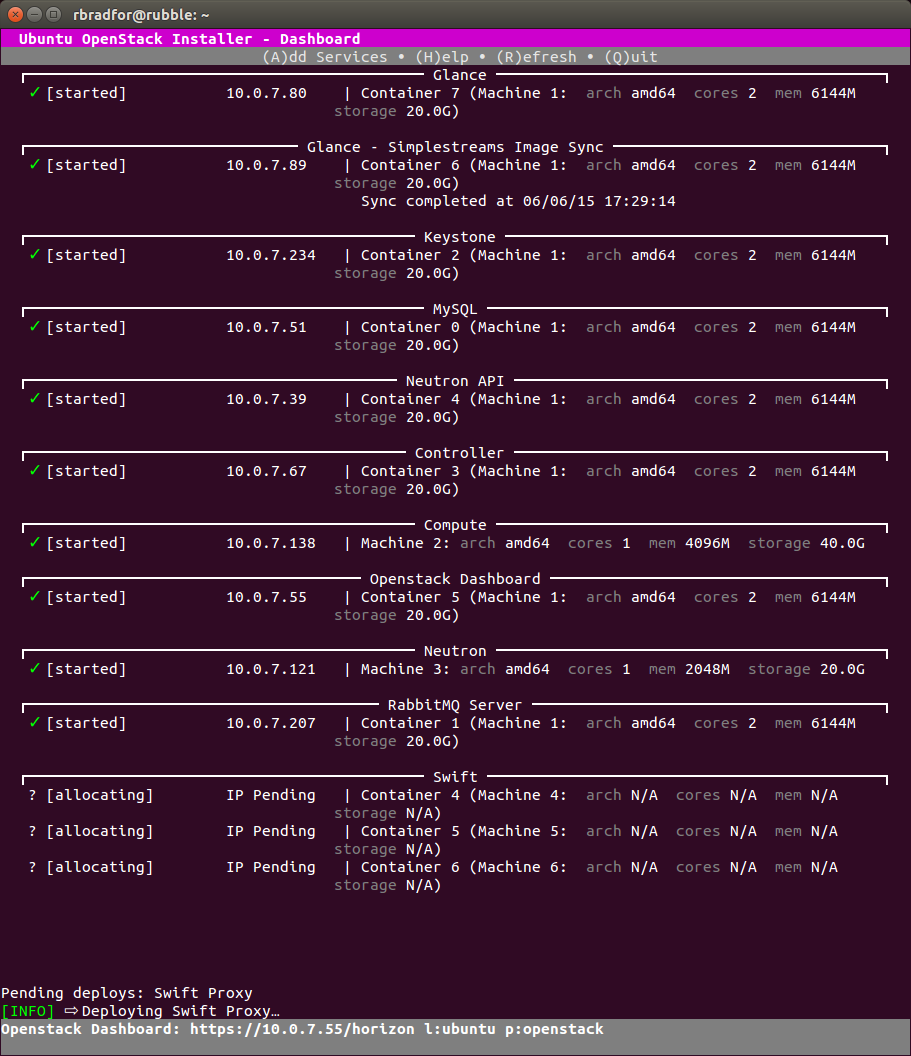

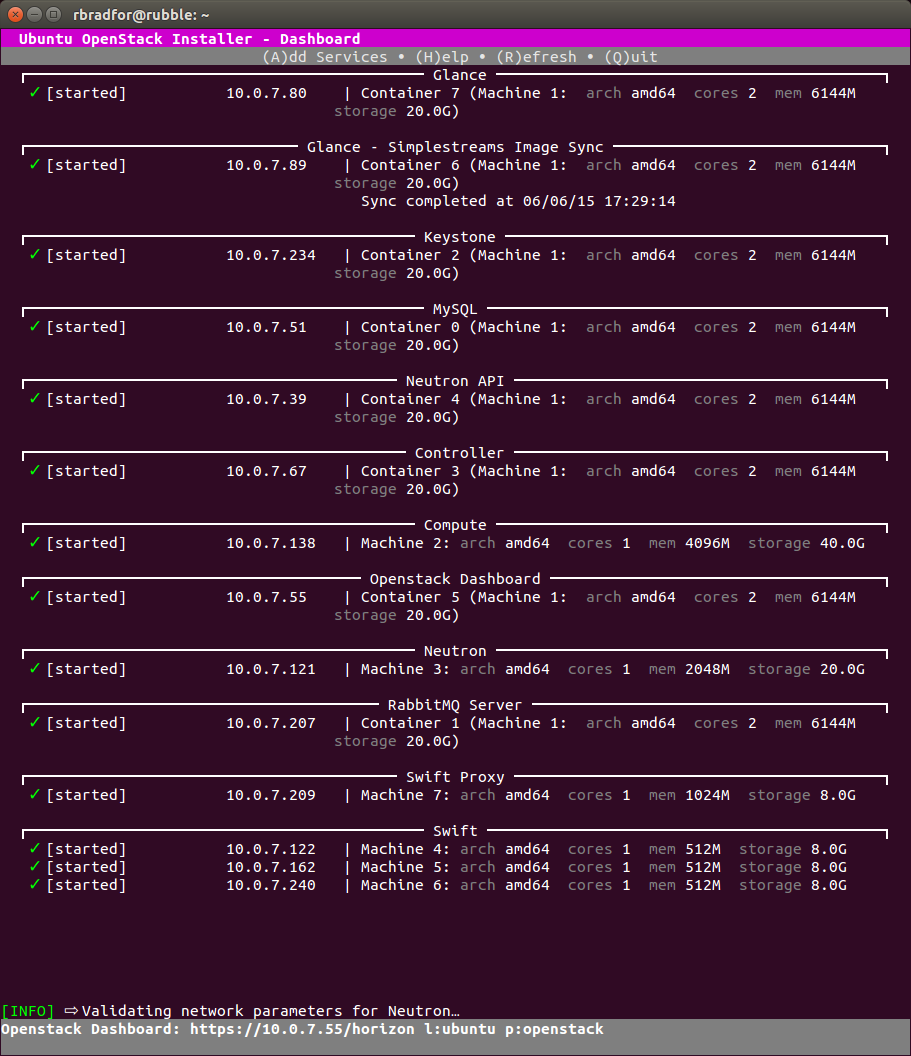

Provisioning

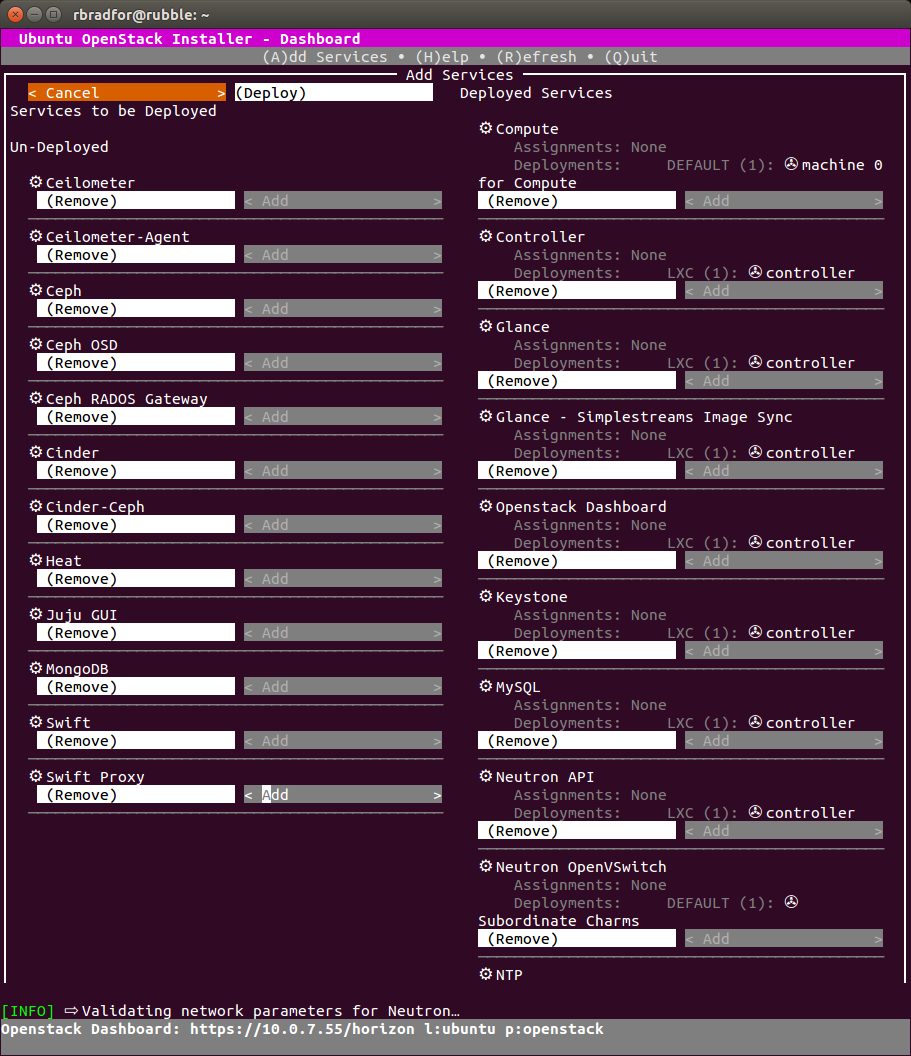

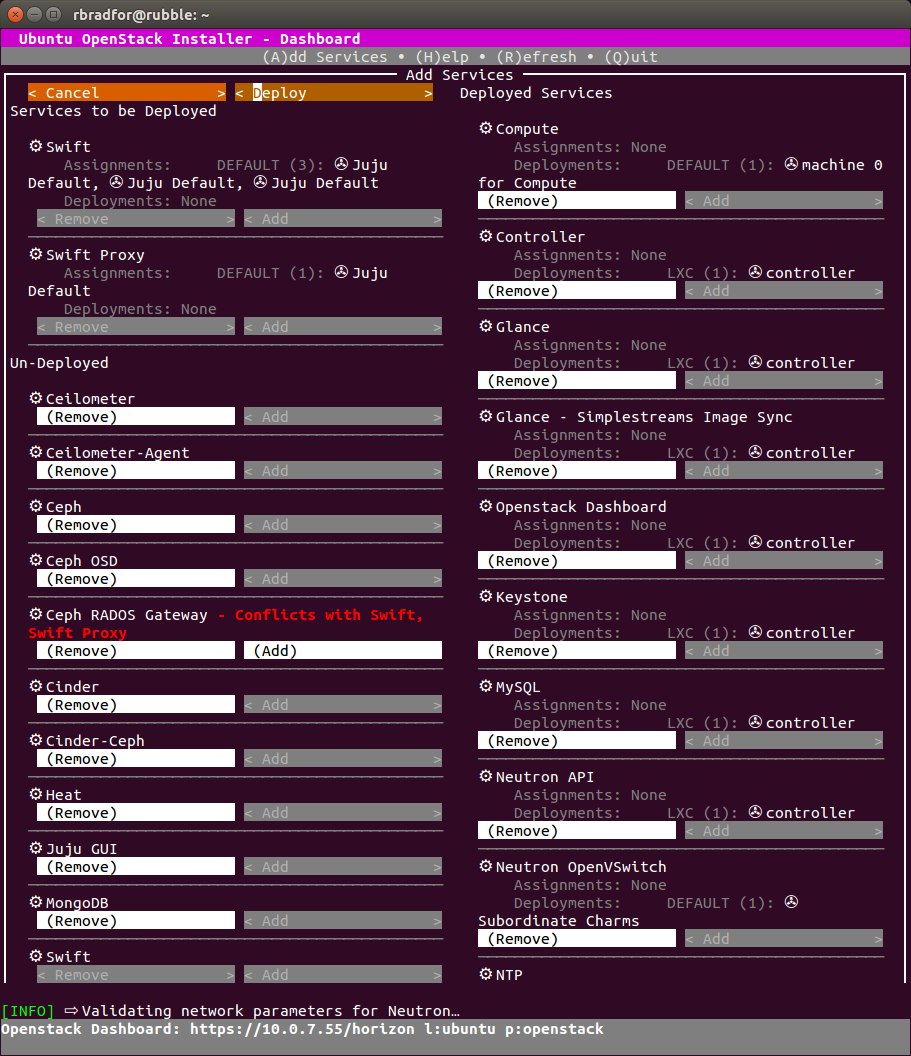

This is the strength of DBaaS. Operations can enable development to provision resources and technology independently, with few additional dependencies to impede them. Operations provides input on which products are made available for self-service, so there is also some control. DBaaS can be viewed as a controlled and flexible enabler. A specific example is an organization that uses the MySQL relational database, where a developer now wishes to use the MongoDB NoSQL unstructured store. An operator may cringe at the notion of a lack of data consistency, structured data query access or performance capabilities. These are all valid points; however, those are discussions for the strategic level of your workflow pipeline and should not be an impediment to iterating quickly. Without oversight, however, iterating quickly can lead to unmanageable outcomes.
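As a rough illustration of "controlled and flexible", the idea can be sketched as a self-service catalog that operations curates and developers provision from. This is only a sketch under stated assumptions: the catalog contents, the tier names and the `request_instance` function are hypothetical and do not correspond to any particular DBaaS product's API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical catalog curated by operations: which products developers
# may provision for themselves, and at what level of support.
CATALOG = {
    "mysql": {"tier": "supported"},
    "mongodb": {"tier": "proof_of_concept"},
}


@dataclass
class ProvisionResult:
    product: str
    approved: bool
    tier: Optional[str]
    note: str


def request_instance(product: str, project: str) -> ProvisionResult:
    """Approve a self-service request only for products in the catalog."""
    entry = CATALOG.get(product.lower())
    if entry is None:
        # Not a flat refusal: the request is routed to strategic review
        # instead of being ignored or delayed.
        return ProvisionResult(product, False, None,
                               "not in catalog; raise for strategic review")
    return ProvisionResult(product, True, entry["tier"],
                           f"provisioned for {project}")


if __name__ == "__main__":
    print(request_instance("MongoDB", "new-project"))
    print(request_instance("Product Z", "new-project"))
```

In this sketch the developer is never blocked on a catalog product, while an unknown product produces an explicit hand-off to the strategic discussion rather than silence.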

Strategic Planning

There always needs to be oversight combined with applicable strategy. A single developer stating they want to use the new product Z for a distributed key/value store needs to be vetted first within the engineering organization by their own developer peers. If another project is already using Product Y, which has the same core data access and features, the burden of supporting an additional product should first be the subject of a self-contained discussion that validates the need.

This is one strength of a good engineering manager, who balances the needs and objectives of the business with the capabilities of the resources available, including staff, tools and technology. Applicable principles put in place should also ensure that some aspect of planning is instilled into the development culture.

Support and Management

Development and engineering resources rarely consider the administration and support required for the suite of products and services used in an organization. The emphasis is on feature development and customer requirements, not the sustainability, longevity and security of any system. Operational support is a long list of needs. Just a few include:

- Information security.

- Information availability.

- Service level agreements (SLAs) between partners, service providers and the internal organization.

- The backup ecosystem, time taken, consistency, point-in-time recovery, testing and verification, cost of H/W, S/W, licenses.

- Internet connectivity.

- Capacity planning and cost analysis of storing and archiving ever increasing sources of data.

- Hardware and software upgrades.

Two-way communication, which is often overlooked, is the start of better understanding. That means operations being included and involved in strategic development planning, and engineering resources being included in the operational needs and requirements for ensuring that new product features operate for the benefit of customers. In summary, “bridging the communication chasm”.

DevOps is an abused term; it implies that developers now perform a subset of the responsibilities of operations. As someone who has worked in development teams and led operations teams, I have found that the skills, personality, rational thinking and decision making required of your resources are vastly different between an engineering task and a production operations task.

Developers need to live a 24 hour day (with the unnecessary 3am emergency call) in the shoes of an operator. The reverse is also true; however, the ramifications for business continuity are not the same. Just one factor, the cost, or more specifically the loss to the business due to a production failure, alters the decision making process. Failure can be anything from a hardware or connectivity problem, to bad code that was released, to a data breach.

Release Criteria

If an organization has a strong (and flexible) policy on release criteria, all parties, from stakeholders and executives to engineering, operations and marketing, should be able to contribute to the discussion and decision for a new product, and for applicable in-house or third-party support. This discussion is not a prerequisite for any department or developer to iterate quickly; however, it is a prerequisite for migrating from a proof-of-concept prototype to a supported feature. It is another often overlooked criterion in the pursuit of rapid deployment of new features, which are significantly more difficult to remove after publication.
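The distinction between iterating freely and promoting to a supported feature can be sketched as a simple gate. This is a minimal sketch, assuming the parties named above each record a sign-off and that a support plan is either in-house or third-party; the names `REQUIRED_SIGN_OFFS`, `missing_sign_offs` and `can_promote` are hypothetical.

```python
from typing import Set

# Assumption: each party named in the release criteria records a sign-off.
REQUIRED_SIGN_OFFS = {
    "stakeholder", "executive", "engineering", "operations", "marketing",
}
# Assumption: support is either provided in-house or by a third party.
SUPPORT_PLANS = {"in-house", "third-party"}


def missing_sign_offs(sign_offs: Set[str]) -> Set[str]:
    """Return the parties that have not yet agreed to the release."""
    return REQUIRED_SIGN_OFFS - sign_offs


def can_promote(sign_offs: Set[str], support_plan: str) -> bool:
    """Gate promotion from proof of concept to supported feature.

    Prototyping itself is never gated here; only promotion is.
    """
    return not missing_sign_offs(sign_offs) and support_plan in SUPPORT_PLANS


if __name__ == "__main__":
    partial = {"engineering", "stakeholder"}
    print(sorted(missing_sign_offs(partial)))
    print(can_promote(partial, "in-house"))
    print(can_promote(set(REQUIRED_SIGN_OFFS), "third-party"))
```

The gate applies only at the point of promotion, which matches the intent that release criteria should not slow down a proof of concept.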