This is not part of my Don’t Assume series, but when a client asks “Why is my database slow?”, you first need to determine whether the database is in fact slow.

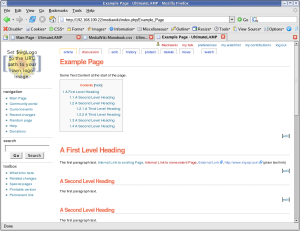

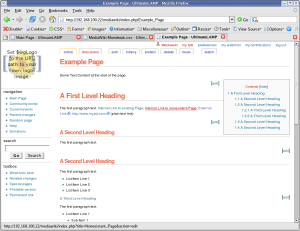

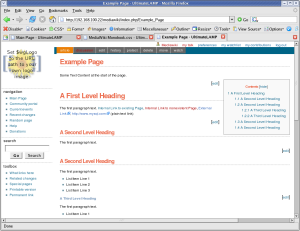

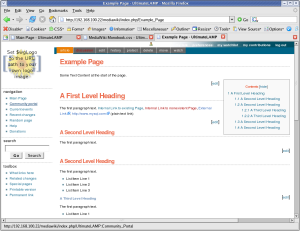

Some simple tools come to the rescue here; one is Firebug. If a web page takes 5 seconds to load, but the .htm file takes 400ms and the rest is spent downloading 100+ assets from one base URL, then is the database actually slow? Tuning the database will only improve the 400ms portion of the 5,000ms download.
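
To make that arithmetic concrete, here is a minimal sketch of the best case. It generously assumes the entire 400ms .htm response is database time, which overstates the real figure; the function name and the 2x speedup are purely illustrative.

```python
def best_case_load_ms(total_ms: float, db_ms: float, db_speedup: float) -> float:
    """Page load time if only the database-backed portion becomes db_speedup times faster."""
    if db_speedup <= 0:
        raise ValueError("db_speedup must be positive")
    # Everything that is not database time is untouched by database tuning.
    return (total_ms - db_ms) + db_ms / db_speedup


total_ms = 5000.0  # full page load, as seen in Firebug
html_ms = 400.0    # the .htm response (assumed here to be all database time)

share = html_ms / total_ms
tuned_ms = best_case_load_ms(total_ms, html_ms, db_speedup=2.0)

print(f"Share of load time tuning can touch: {share:.0%}")
print(f"Load time after doubling database speed: {tuned_ms:.0f}ms")
```

Even if the database became twice as fast, the user would still wait roughly 4.8 seconds, which is why the other 4,600ms deserves attention first.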

There are some very simple tips here. MySQL is my domain expertise, and I will not profess to improving the entire stack; however, perception is everything to a user, and you can often do a lot. Some simple points include:

- Know about blocking assets in your <head> element, e.g. .js files.

- Streamline .js, .css and images to what’s needed, e.g. don’t download a 100k image only to resize it to a thumbnail via style elements.

- Sprites. As with many simple but efficient SQL statements, network overhead is your greatest expense.

- Splitting images to a different domain.

- Splitting images to multiple domains (e.g. 3; only CNAME records are needed). Hint: learn about the protocol.

- Cookieless domains for static assets

- Lighter web container for static assets (e.g. nginx, lighttpd)

- Know about caching, expires and etags

- Stripping out http://www.domain.com from all your internal links (that one alone saved 12% of HTML page size for a client). You may ask whether that is really a big deal. On a high-volume site, the sooner you can release the socket on your web server, the sooner you can start serving a different request.
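
The last tip is easy to measure before you commit to it. The following is a rough sketch, not a production rewriter: it uses a regular expression rather than a real HTML parser, only looks at `href` and `src` attributes, and the helper name `strip_base_url` and the sample markup are made up for illustration.

```python
import re


def strip_base_url(html: str, base_url: str) -> str:
    """Turn href/src values that start with base_url into root-relative paths."""
    base = re.escape(base_url.rstrip("/"))
    # Match only when the base URL is followed by "/", so look-alike hosts
    # such as www.domain.com.example.org are left alone.
    pattern = re.compile(r"((?:href|src)=[\"'])" + base + r"(?=/)")
    return pattern.sub(r"\1", html)


page = (
    '<a href="http://www.domain.com/about">About</a>'
    '<img src="http://www.domain.com/img/logo.png">'
    '<a href="http://other.example/x">External</a>'
)

slim = strip_base_url(page, "http://www.domain.com")
saving = 1 - len(slim) / len(page)

print(slim)
print(f"HTML size reduced by {saving:.1%}")
```

Run it against a saved copy of a real page to see whether the saving is worth the change in your environment; the 12% figure above came from one client's site and will differ elsewhere.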

Like tuning a database, some things work better than others, some require more testing than others, and consultants never tell you all the tricks.

References

As with everything in tuning, do your research and also determine what works in your environment and what doesn’t. Two excellent resources to start with are Steve Souders and Best Practices for Speeding Up Your Web Site by Yahoo.
