The following are my slides that I presented at ODTUG Kaleidoscope 2010. This presentation talks about the MySQL defaults including a non-transactional state, silent data truncations, date management, and transaction isolation options. These are all critical for data integrity and consistency. I also cover in-depth topics including SQL_MODE, character sets and collations.

Getting Nokogiri working under Mac OS X

The official Installation documentation states:

sudo port install libxml2 libxslt sudo gem install nokogiri

however I found this not to work for me. The following did work.

$ sudo port install libxml2 libxslt $ sudo gem install nokogiri ERROR: could not find nokogiri locally or in a repository $ sudo gem sources -a http://gems.github.com Bulk updating Gem source index for: http://gems.github.com Updating metadata for 1 gems from http://gems.rubyforge.org $ sudo gem install nokogiri ERROR: could not find nokogiri locally or in a repository $ sudo gem update --system Updating RubyGems... Attempting remote update of rubygems-update Successfully installed rubygems-update-1.3.6 1 gem installed Installing ri documentation for rubygems-update-1.3.6... Installing RDoc documentation for rubygems-update-1.3.6... Updating version of RubyGems to 1.3.6 Installing RubyGems 1.3.6 RubyGems 1.3.6 installed $ sudo gem install nokogiri Building native extensions. This could take a while... Successfully installed nokogiri-1.4.2 1 gem installed Installing ri documentation for nokogiri-1.4.2... No definition for parse_memory No definition for parse_file No definition for parse_with No definition for get_options No definition for set_options Installing RDoc documentation for nokogiri-1.4.2... No definition for parse_memory No definition for parse_file No definition for parse_with No definition for get_options No definition for set_options

I’m not sure why I got those additional errors, however I was able to confirm a valid installation with.

$ irb

>> require ‘nokogiri’

=> true

>> quit

Still room at Kaleidoscope for MySQL attendees

Today I received notice that next week’s Velocity conference is at maximum capacity. With just under 2 weeks before the start of ODTUG Kaleidoscope in Washington DC we still have room for late registrations. There is 4 days of MySQL content, free events and also a Sunday Symposium that includes talks on performance and high availability.

Contact any of the MySQL speakers directly and you can receive a special 50% discount code. This is only for MySQL attendees.

If you live in the DC area and only want the FREE option then come along and join use on Monday night for a free session and reception.

ODTUG Kaleidoscope 2010

July 27 – July 1

Marriott Wardman Part Hotel

2660 Woodley Road NW

Washington, District Of Columbia 20008

www.odtugkaleidoscope.com

Conference highlights include

Community Service Day – Saturday, June 26, 8:00 a.m. – 1:00 p.m.

Community Service Day – Saturday, June 26, 8:00 a.m. – 1:00 p.m.

Join ODTUG volunteers and help refurbish a school in D.C. Under the guidance of Greater DC Cares (GDCC), the leading and largest nonprofit coordinator of volunteerism in the D.C. region, ODTUGgers will: Sort books, beautify school grounds, and paint games on blacktop outside of hte school.

There is still time to sign up!

Four Full-day Symposia – Sunday, June 27, 8:30 a.m. – 4:00 p.m.

Application Express; Oracle EPM and Essbase; Security, Scalability, and Performance; SOA and BPM. One-day registration available.

Welcome Reception/Battle of the Rock Bands – Sunday, June 27, 6:15 – 8:00 p.m.

Meet the exhibitors and compete in the “Battle of the Rock Bands.” Sign up to play.

Opening General Session – Monday, June 28, 8:30 – 10:00 a.m.

Awards for Best Technical Paper and Best 2009 Presentations

Keynote – “Future of the Internet and its Social Impact” by Lee Rainie, Director of the PEW Research Center’s Internet & American Life Project.

Sundown Sessions with Oracle ACE Directors – Monday, June 28, 5:45 – 6:45 p.m.

Reception to meet the Oracle ACE Directors immediately follows – 6:45 – 7:45 p.m.

Special Event – Wednesday, June 30, 6:30 – 10:00 p.m.

Special Event – Wednesday, June 30, 6:30 – 10:00 p.m.

Featuring comedian John Heffron, 2nd season champion of the hit TV show, Last Comic Standing.

Music by live cover band, Right Foot Red

MongoDB Experience: Server logging

By default the mongod process sends all output to stdout. You can also specify the daemon to log to file which is necessary for any production implementation. For example:

$ mongod --logpath=`pwd`/mongo.log & all output going to: /home/rbradfor/projects/mongo/mongo.log ^C

As you can see there is still a message to stdout, that should be cleaned up for a GA release. The output you will see for a clean startup/shutdown is:

Fri Jun 11 14:05:29 Mongo DB : starting : pid = 7990 port = 27017 dbpath = /home/rbradfor/projects/mongo/data/cu rrent master = 0 slave = 0 64-bit Fri Jun 11 14:05:29 db version v1.4.3, pdfile version 4.5 Fri Jun 11 14:05:29 git version: 47ffbdfd53f46edeb6ff54bbb734783db7abc8ca Fri Jun 11 14:05:29 sys info: Linux domU-12-31-39-06-79-A1 2.6.21.7-2.ec2.v1.2.fc8xen #1 SMP Fri Nov 20 17:48:28 EST 2009 x86_64 BOOST_LIB_VERSION=1_41 Fri Jun 11 14:05:29 waiting for connections on port 27017 Fri Jun 11 14:05:29 web admin interface listening on port 28017 Fri Jun 11 14:05:31 got kill or ctrl c signal 2 (Interrupt), will terminate after current cmd ends Fri Jun 11 14:05:31 now exiting Fri Jun 11 14:05:31 dbexit: Fri Jun 11 14:05:31 shutdown: going to close listening sockets... Fri Jun 11 14:05:31 going to close listening socket: 5 Fri Jun 11 14:05:31 going to close listening socket: 6 Fri Jun 11 14:05:31 shutdown: going to flush oplog... Fri Jun 11 14:05:31 shutdown: going to close sockets... Fri Jun 11 14:05:31 shutdown: waiting for fs preallocator... Fri Jun 11 14:05:31 shutdown: closing all files... Fri Jun 11 14:05:31 closeAllFiles() finished Fri Jun 11 14:05:31 shutdown: removing fs lock... Fri Jun 11 14:05:31 dbexit: really exiting now

MongoDB logging does not give an option to format the date/time appropriately. The format does not match the syslog of Ubuntu/CentOS

Jun 9 10:05:46 barney kernel: [1025968.983209] SGI XFS with ACLs, security attributes, realtime, large block/in ode numbers, no debug enabled Jun 9 10:05:46 barney kernel: [1025968.984518] SGI XFS Quota Management subsystem Jun 9 10:05:46 barney kernel: [1025968.990183] JFS: nTxBlock = 8192, nTxLock = 65536 Jun 9 10:05:46 barney kernel: [1025969.007624] NTFS driver 2.1.29 [Flags: R/O MODULE]. Jun 9 10:05:46 barney kernel: [1025969.020995] QNX4 filesystem 0.2.3 registered. Jun 9 10:05:46 barney kernel: [1025969.039264] Btrfs loaded

Jun 8 00:00:00 dc1 nagios: CURRENT HOST STATE: localhost;UP;HARD;1;PING OK - Packet loss = 0%, RTA = 0.01 ms Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;Current Load;OK;HARD;1;OK - load average: 0.00, 0.00, 0.00 Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;Current Users;OK;HARD;1;USERS OK - 2 users currently logged in Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;HTTP;CRITICAL;HARD;4;Connection refused Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;PING;OK;HARD;1;PING OK - Packet loss = 0%, RTA = 0.01 ms Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;Root Partition;OK;HARD;1;DISK OK - free space: / 107259 MB (49% inode=98%): Jun 8 00:00:00 dc1 nagios: CURRENT SERVICE STATE: localhost;SSH;OK;HARD;1;SSH OK - OpenSSH_4.3 (protocol 2.0)

And for reference here is the mysql format, which is also not configurable.

100605 16:43:38 mysqld_safe Starting mysqld daemon with databases from /opt/mysql51/data 100605 16:43:38 [Warning] '--log_slow_queries' is deprecated and will be removed in a future release. Please use ''--slow_query_log'/'--slow_query_log_fi le'' instead. 100605 16:43:38 [Warning] '--log' is deprecated and will be removed in a future release. Please use ''--general_log'/'--general_log_file'' instead. 100605 16:43:38 [Warning] No argument was provided to --log-bin, and --log-bin-index was not used; so replication may break when this MySQL server acts a s a master and has his hostname changed!! Please use '--log-bin=dc1-bin' to avoid this problem. /opt/mysql51/bin/mysqld: File './dc1-bin.index' not found (Errcode: 13) 100605 16:43:38 [ERROR] Aborting

However unlike other products including MySQL the next execution of the mongod process overwrites the log file. This will catch some administrators out. You need to remember to also add –logappend. Personally I’d prefer to see this is the default

$ mongod --logpath=`pwd`/mongo.log --logappend

I did observe some confusion on messaging. Using the mongo shell you get a jumble of logging messages during a shutdown.

$ mongo

MongoDB shell version: 1.4.3

url: test

connecting to: test

type "help" for help

> use admin

switched to db admin

> db.shutdownServer();

Fri Jun 11 13:54:08 query failed : admin.$cmd { shutdown: 1.0 } to: 127.0.0.1

server should be down...

Fri Jun 11 13:54:08 trying reconnect to 127.0.0.1

Fri Jun 11 13:54:08 reconnect 127.0.0.1 ok

Fri Jun 11 13:54:08 query failed : admin.$cmd { getlasterror: 1.0 } to: 127.0.0.1

Fri Jun 11 13:54:08 JS Error: Error: error doing query: failed (anon):1284

> exit

bye

This also results in an unformatted message in the log file for some reason.

$ tail mongo.log Fri Jun 11 13:54:08 shutdown: removing fs lock... Fri Jun 11 13:54:08 dbexit: really exiting now Fri Jun 11 13:54:08 got request after shutdown() ERROR: Client::~Client _context should be NULL: conn

Nothing of any critical nature however all important for system administrators that have monitoring scripts or using monitoring products.

MongoDB Experience: Key/Value Store

MongoDB is categorized as a schema-less, schema-free or a document orientated data store. Another category of NoSQL product is the key/value store. It had not dawned on me until a discussion with some of the 10gen employees that MongoDB is also a key/value store, this is just a subset of features.

How would you consider the design of a key/value store? Using the memached model, there are 4 primary attributes to consider:

- The Key to store/retrieve

- The Value for the given key

- An auto expiry of the cached data

- A key scope enabling multiple namespaces

There are three primary functions:

- Put a given Key/Value pair

- Get a given Key

- Delete a given Key

Let’s explore the options. The first is to create a new collection for each key. That way there is only one row per key,

> use keystore

> var d = new Date();

> var id = "key1";

> var kv = { key: id,val: "Hello World",expires: d}

> db.key1.save(kv);

> db.key1.find();

{ "_id" : ObjectId("4c126095c68fcaf3b0e07a2b"), "key" : "key1", "val" : "Hello World", "expires" : "Fri Jun 11 2010 12:09:51 GMT-0400 (EDT)" }

However when we start loading we run into a problem.

> db.key99999.save({key: "key99999", val: "Hello World", expires: new Date()})

too many namespaces/collections

> show collections;

Fri Jun 11 12:49:02 JS Error: uncaught exception: error: {

"$err" : "too much key data for sort() with no index. add an index or specify a smaller limit"

}

> db.stats()

{

"collections" : 13661,

"objects" : 26118,

"dataSize" : 2479352,

"storageSize" : 93138688,

"numExtents" : 13665,

"indexes" : 13053,

"indexSize" : 106930176,

"ok" : 1

}

I did read there was a limit on the number of collections at Using a Large Number of Collections.

Also for reference, I look at the underlying data files shows the ^2 increment of data files.

$ ls -lh data/current total 2.2G -rw------- 1 rbradfor rbradfor 64M 2010-06-11 12:45 keystore.0 -rw------- 1 rbradfor rbradfor 128M 2010-06-11 12:45 keystore.1 -rw------- 1 rbradfor rbradfor 256M 2010-06-11 12:46 keystore.2 -rw------- 1 rbradfor rbradfor 512M 2010-06-11 12:48 keystore.3 -rw------- 1 rbradfor rbradfor 1.0G 2010-06-11 12:48 keystore.4 -rw------- 1 rbradfor rbradfor 16M 2010-06-11 12:48 keystore.ns

> db.dropDatabase();

{ "dropped" : "keystore.$cmd", "ok" : 1 }

In my next test I’ll repeat by adding the key as a row or document for just one collection.

MongoDB Experience: Stats Example App

The best way to learn any new product is to a) read the manual, and b) start using the product.

I created a simple sample application so I could understand the various functions including adding data, searching as well as management functions etc. As with any good sample application using a source of data that already exists always makes life easier. For this example I’m going to use the Operating System output so I will have an ever increasing amount of output for no additional work.

I will be starting with a database called ‘stats’. For this database my first collection is going to be called ‘system’ and this is going to record the most basic of information including date/time, host and cpu (user,sys,idle) stats. I have a simple shell script that creates an appropriate JSON string and I use mongoimport to load the data. Here is my Version 0.1 architectural structure.

mongo> use stats;

mongo> db.system.findOne();

{

"_id" : ObjectId("4c11183580399ad2db4f503b"),

"host" : "barney",

"epoch" : 1276188725,

"date" : "Thu Jun 10 12:52:05 EDT 2010",

"cpu" : {

"user" : 2,

"sys" : 2,

"idle" : 95

},

"raw" : " 11435699 1379565 9072198 423130352 2024835 238766 2938641 0 0"

}

I made some initial design decisions before I understand the full strengths/limitation of MongoDB as well as what my actual access paths to data will be.

While I’m using a seconds since epoch for simple range searching, I’m adding a presentation date for user readability. I’ve created a different sub element for cpu, because it a) this element has a number of individual attributes I will want to report and search on, and b) this collection should be extended to include other information like load average, running processes, memory etc.

If my shell script runs in debug mode, I also record the raw data used to determine the end result. This makes debugging easier.

Here is my first query.

Find all statistics between two dates. It took a bit of getting the correct construct syntax correct, $le and $ge didn’t work so RTFM highlighted the correct syntax. I also first included elements for epoch, which resulted in a OR condition, I see you can add multiple comparison operators to a single element to get an AND operation.

mongo> db.system.find({epoch: { $gte: 1276188725, $lte: 1276188754}});

{ "_id" : ObjectId("4c11183580399ad2db4f503b"), "host" : "barney", "epoch" : 1276188725, "date" : "Thu Jun 10 12:52:05 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 }, "raw" : " 11435699 1379565 9072198 423130352 2024835 238766 2938641 0 0" }

{ "_id" : ObjectId("4c11184c80399ad2db4f503c"), "host" : "barney", "epoch" : 1276188748, "date" : "Thu Jun 10 12:52:28 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 }, "raw" : " 11436605 1379565 9072320 423138450 2024862 238770 2938641 0 0" }

{ "_id" : ObjectId("4c11185080399ad2db4f503d"), "host" : "barney", "epoch" : 1276188752, "date" : "Thu Jun 10 12:52:32 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 }, "raw" : " 11437005 1379565 9072330 423139527 2024862 238770 2938641 0 0" }

{ "_id" : ObjectId("4c11185180399ad2db4f503e"), "host" : "barney", "epoch" : 1276188753, "date" : "Thu Jun 10 12:52:33 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 }, "raw" : " 11437130 1379565 9072334 423139862 2024862 238770 2938641 0 0" }

{ "_id" : ObjectId("4c11185280399ad2db4f503f"), "host" : "barney", "epoch" : 1276188754, "date" : "Thu Jun 10 12:52:34 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 }, "raw" : " 11437316 1379565 9072338 423140325 2024910 238770 2938641 0 0" }

Assuming I’m going to have stats from more then one server in my data, we should always filter by hostname, and then for given period.

mongo> db.system.find({host: "barney", epoch: { $gte: 1276188725, $lte: 1276188754}});

If I only want to see the Date/Time and CPU stats, I can show a subset of the elements found.

mongo> db.system.find({epoch: { $gte: 1276188725, $lte: 1276188754}}, {date:1,cpu:1});

{ "_id" : ObjectId("4c11183580399ad2db4f503b"), "date" : "Thu Jun 10 12:52:05 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 } }

{ "_id" : ObjectId("4c11184c80399ad2db4f503c"), "date" : "Thu Jun 10 12:52:28 EDT 2010", "cpu" : { "user" : 2, "sys" : 2, "idle" : 95 } }

...

Filtering on a sub-element is also possible however I found that the representation of strings and numbers does not do an implied conversion. In the following example “2” does not match any results, while 2 does.

mongo> db.system.findOne({host: "barney", "cpu.user": "2"})

null

mongo> db.system.findOne({host: "barney", "cpu.user": 2})

{

"_id" : ObjectId("4c11161680399ad2db4f5033"),

"host" : "barney",

"epoch" : 1276188182,

"date" : "Thu Jun 10 12:43:02 EDT 2010",

"cpu" : {

"user" : 2,

"sys" : 2,

"idle" : 95

}

}

Given the collection and load process works, data is being recorded and I can perform some searching I now have the basis for adding additional rich data elements, learning about the internal DBA operations possible after I fix the bug with all my values being 2/2/95.

MongoDB Experience: Replication 101

After successfully installing and testing mongoDB it’s very easy to create a replication environment.

$ mkdir -p data/{master,slave}

$ mongod --dbpath=`pwd`/data/master --master --port 28011 > master.log 2>&1 &

# Always check your log file

$ cat master.log

$ mongod --dbpath=`pwd`/data/slave --slave --source localhost:28011 --port 28022 > slave.log 2>&1 &

$ cat slave.log

The options are relatively descriptive and straightforward.

- –dbpath – The directory for data (we set because we are running master/slave on same server)

- –port – Likewise we are running multiple instances on same machine

- –master – I’m the master

- –slave – I’m a slave

- –source – For slaves, tell them were the source (i.e. master is)

What I found under the covers was a difference from the single instance version. There is a series of ‘local’ files for the namespace, where in the single instance version there were ‘test’ files.

$ ls -ltR data total 0 drwxr-xr-x 6 rbradfor staff 204 Jun 10 10:24 slave drwxr-xr-x 5 rbradfor staff 170 Jun 10 10:22 master data/slave: total 163848 drwxr-xr-x 2 rbradfor staff 68 Jun 10 10:24 _tmp -rw------- 1 rbradfor staff 67108864 Jun 10 10:24 local.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:24 local.ns -rwxr-xr-x 1 rbradfor staff 6 Jun 10 10:24 mongod.lock data/slave/_tmp: data/master: total 163848 -rw------- 1 rbradfor staff 67108864 Jun 10 10:22 local.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:22 local.ns -rwxr-xr-x 1 rbradfor staff 6 Jun 10 10:22 mongod.lock

A quick replication test.

$ mongo --port 28011

MongoDB shell version: 1.4.3

url: test

connecting to: 127.0.0.1:28011/test

type "help" for help

> db.foo.save({s:"Hello world"});

> db.foo.find();

{ "_id" : ObjectId("4c10f7904a30c35548b0af06"), "s" : "Hello world" }

> exit

bye

$ mongo --port 28022

MongoDB shell version: 1.4.3

url: test

connecting to: 127.0.0.1:28022/test

type "help" for help

> db.foo.find();

{ "_id" : ObjectId("4c10f7904a30c35548b0af06"), "s" : "Hello world" }

> exit

A look now at the underlying data shows a ‘test’ namespace which confirms the lazy instantiation approach. The ‘local’ namespace files are obviously a reflection of the –master/–slave operation.

$ ls -ltR data total 0 drwxr-xr-x 9 rbradfor staff 306 Jun 10 10:32 slave drwxr-xr-x 8 rbradfor staff 272 Jun 10 10:32 master data/slave: total 589832 -rw------- 1 rbradfor staff 134217728 Jun 10 10:33 test.1 drwxr-xr-x 2 rbradfor staff 68 Jun 10 10:32 _tmp -rw------- 1 rbradfor staff 67108864 Jun 10 10:32 test.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:32 test.ns -rw------- 1 rbradfor staff 67108864 Jun 10 10:24 local.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:24 local.ns -rwxr-xr-x 1 rbradfor staff 6 Jun 10 10:24 mongod.lock data/master: total 327688 drwxr-xr-x 2 rbradfor staff 68 Jun 10 10:32 _tmp -rw------- 1 rbradfor staff 67108864 Jun 10 10:32 test.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:32 test.ns -rw------- 1 rbradfor staff 67108864 Jun 10 10:22 local.0 -rw------- 1 rbradfor staff 16777216 Jun 10 10:22 local.ns -rwxr-xr-x 1 rbradfor staff 6 Jun 10 10:22 mongod.lock

By default there appears to be no read-only default state for a slave. I was able to add new data to the slave.

$ mongo --port 28022

MongoDB shell version: 1.4.3

url: test

connecting to: 127.0.0.1:28022/test

type "help" for help

> db.foo.save({s:"Hello New York"});

> db.foo.find();

{ "_id" : ObjectId("4c10f7904a30c35548b0af06"), "s" : "Hello world" }

{ "_id" : ObjectId("4c10f864d8e80f1a1ad305cf"), "s" : "Hello New York" }

>

A closer look at this ‘local’ namespace and a check via the docs gives us details of the slave configuration.

$ mongo --port 28022

MongoDB shell version: 1.4.3

url: test

connecting to: 127.0.0.1:28022/test

type "help" for help

> show dbs;

admin

local

test

> use local;

switched to db local

> show collections;

oplog.$main

pair.sync

sources

system.indexes

> db.sources.find();

{ "_id" : ObjectId("4c10f5b633308f7c3d7afc45"), "host" : "localhost:28011", "source" : "main", "syncedTo" : { "t" : 1276180895000, "i" : 1 }, "localLogTs" : { "t" : 1276180898000, "i" : 1 } }

You can also with the mongo client connect directly to a collection via the command line.

$ mongo localhost:28022/local

MongoDB shell version: 1.4.3

url: localhost:28022/local

connecting to: localhost:28022/local

type "help" for help

> db.sources.find();

{ "_id" : ObjectId("4c10f5b633308f7c3d7afc45"), "host" : "localhost:28011", "source" : "main", "syncedTo" : { "t" : 1276180775000, "i" : 1 }, "localLogTs" : { "t" : 1276180778000, "i" : 1 } }

> exit

bye

The shell gives 3 convenience commands for showing replication state.

On the Slave

$ mongo --port 28022

> db.getReplicationInfo();

{

"logSizeMB" : 50,

"timeDiff" : 1444,

"timeDiffHours" : 0.4,

"tFirst" : "Thu Jun 10 2010 10:24:54 GMT-0400 (EDT)",

"tLast" : "Thu Jun 10 2010 10:48:58 GMT-0400 (EDT)",

"now" : "Thu Jun 10 2010 10:48:59 GMT-0400 (EDT)"

}

> db.printReplicationInfo();

configured oplog size: 50MB

log length start to end: 1444secs (0.4hrs)

oplog first event time: Thu Jun 10 2010 10:24:54 GMT-0400 (EDT)

oplog last event time: Thu Jun 10 2010 10:48:58 GMT-0400 (EDT)

now: Thu Jun 10 2010 10:49:07 GMT-0400 (EDT)

> db.printSlaveReplicationInfo();

source: localhost:28011

syncedTo: Thu Jun 10 2010 10:49:25 GMT-0400 (EDT)

= 1secs ago (0hrs)

On the master, the same commands are applicable, output basically the same.

$ mongo --port 28011

> db.getReplicationInfo();

{

"logSizeMB" : 50,

"timeDiff" : 1714,

"timeDiffHours" : 0.48,

"tFirst" : "Thu Jun 10 2010 10:22:01 GMT-0400 (EDT)",

"tLast" : "Thu Jun 10 2010 10:50:35 GMT-0400 (EDT)",

"now" : "Thu Jun 10 2010 10:50:40 GMT-0400 (EDT)"

}

> db.printReplicationInfo();

configured oplog size: 50MB

log length start to end: 1714secs (0.48hrs)

oplog first event time: Thu Jun 10 2010 10:22:01 GMT-0400 (EDT)

oplog last event time: Thu Jun 10 2010 10:50:35 GMT-0400 (EDT)

now: Thu Jun 10 2010 10:50:45 GMT-0400 (EDT)

> db.printSlaveReplicationInfo();

local.sources is empty; is this db a --slave?

>

From these commands there seems no obvious way to easily identify if an instance is a master or not.

References

DBA operations from shell

Replication

Master/Slave Replication

MongoDB Experience: Gotcha with collection names

In my earlier tests I bulk loaded data with the following command.

mongoimport -d olympics -c olympic_event -type tsv --headerline -f name,id,sport,demonstration_competitions,olympic_games_contested,competitions,contested_as_demonstration_event --drop olympic_event.tsv connected to: 127.0.0.1 dropping: olympics.olympic_event imported 775 objects

As you can see I imported 775 objects, however when I went to review them via the mongo interactive shell I found no data.

> use olympics; switched to db olympics > db.olypics.olympic_event.find(); # No results?

I was able to confirm these objects were in the namespace.

> db.system.namespaces.find();

{ "name" : "olympics.system.indexes" }

{ "name" : "olympics.demonstration_event_athlete_relationship" }

{ "name" : "olympics.demonstration_event_athlete_relationship.$_id_" }

{ "name" : "olympics.olympic_athlete" }

{ "name" : "olympics.olympic_athlete.$_id_" }

{ "name" : "olympics.olympic_athlete_affiliation" }

{ "name" : "olympics.olympic_athlete_affiliation.$_id_" }

{ "name" : "olympics.olympic_bidding_city" }

{ "name" : "olympics.olympic_bidding_city.$_id_" }

{ "name" : "olympics.olympic_city_bid" }

{ "name" : "olympics.olympic_city_bid.$_id_" }

{ "name" : "olympics.olympic_demonstration_competition" }

{ "name" : "olympics.olympic_demonstration_competition.$_id_" }

{ "name" : "olympics.olympic_demonstration_medal_honor" }

{ "name" : "olympics.olympic_demonstration_medal_honor.$_id_" }

{ "name" : "olympics.olympic_event" }

{ "name" : "olympics.olympic_event.$_id_" }

{ "name" : "olympics.olympic_event_competition" }

{ "name" : "olympics.olympic_event_competition.$_id_" }

{ "name" : "olympics.olympic_games" }

has more

> it

{ "name" : "olympics.olympic_games.$_id_" }

{ "name" : "olympics.olympic_host_city" }

{ "name" : "olympics.olympic_host_city.$_id_" }

{ "name" : "olympics.olympic_mascot" }

{ "name" : "olympics.olympic_mascot.$_id_" }

{ "name" : "olympics.olympic_medal" }

{ "name" : "olympics.olympic_medal.$_id_" }

{ "name" : "olympics.olympic_medal_demonstration" }

{ "name" : "olympics.olympic_medal_demonstration.$_id_" }

{ "name" : "olympics.olympic_medal_honor" }

{ "name" : "olympics.olympic_medal_honor.$_id_" }

{ "name" : "olympics.olympic_participating_country" }

{ "name" : "olympics.olympic_participating_country.$_id_" }

{ "name" : "olympics.olympic_sport" }

{ "name" : "olympics.olympic_sport.$_id_" }

{ "name" : "olympics.olympic_venue" }

{ "name" : "olympics.olympic_venue.$_id_" }

The problem is I was using the namespace object with db.find(), not the collection object. I am already in the database scope with the use command.

Knowing this I get what I expected with the correct collection name.

> db.olympic_event.find();

{ "_id" : ObjectId("4c0fb666a5cd86585be7c0fd"), "name" : "Men's Boxing, Super Heavyweight +91kg", "id" : "/guid/9202a8c04000641f8000000008d88df9", "sport" : "Boxing", "demonstration_competitions" : "", "olympic_games_contested" : "2008 Summer Olympics,1984 Summer Olympics,2000 Summer Olympics,2004 Summer Olympics,1988 Summer Olympics,1996 Summer Olympics,1992 Summer Olympics", "competitions" : "Boxing at the 1984 Summer Olympics - Super Heavyweight ,Boxing at the 2000 Summer Olympics - Super Heavyweight,Boxing at the 1988 Summer Olympics - Super Heavyweight ,Boxing at the 2004 Summer Olympics - Super Heavyweight,Boxing at the 1992 Summer Olympics - Super Heavyweight ,Boxing at the 2008 Summer Olympics - Super heavyweight,Boxing at the 1996 Summer Olympics - Super Heavyweight" }

{ "_id" : ObjectId("4c0fb666a5cd86585be7c0fe"), "name" : "Men's Judo, 60 - 66kg (half-lightweight)", "id" : "/guid/9202a8c04000641f8000000008d88d0e", "sport" : "Judo", "demonstration_competitions" : "", "olympic_games_contested" : "2004 Summer Olympics,2000 Summer Olympics,2008 Summer Olympics", "competitions" : "Judo at the 2008 Summer Olympics – Men's Half Lightweight (66 kg),Judo at the 2000 Summer Olympics - Men's Half Lightweight (66 kg),Judo at the 2004 Summer Olympics - Men's Half Lightweight (66 kg)" }

{ "_id" : ObjectId("4c0fb666a5cd86585be7c0ff"), "name" : "Men's Tennis, Indoor Singles", "id" : "/guid/9202a8c04000641f8000000010be70e8", "sport" : "Tennis", "demonstration_competitions" : "", "olympic_games_contested" : "1912 Summer Olympics,1908 Summer Olympics", "competitions" : "Tennis at the 1908 Summer Olympics - Men's Indoor Singles,Tennis at the 1912 Summer Olympics - Men's Indoor Singles" }

...

It’s interesting that a collection name can contain a fullstop ‘.’ which is the delimiter in the command syntax. In my earlier observation I was not getting an error, only an empty response. For example you can do this.

> use test;

switched to db test

> db.foo.x.save({a: 1});

> db.foo.x.find();

{ "_id" : ObjectId("4c0fc1784ff83a6831364d57"), "a" : 1 }

MongoDB Experience: What's running in the DB

You can very easily find out the running threads in the database (e.g. like a MySQL SHOW PROCESSLIST) with db.currentOp.

> db.currentOp();

{ "inprog" : [ ] }

No much happening, however under some load you can see

> db.currentOp();

{

"inprog" : [

{

"opid" : 27980,

"active" : true,

"lockType" : "write",

"waitingForLock" : false,

"secs_running" : 0,

"op" : "insert",

"ns" : "olympics.olympic_athlete",

"client" : "127.0.0.1:63652",

"desc" : "conn"

}

]

}

> db.currentOp();

{

"inprog" : [

{

"opid" : 57465,

"active" : true,

"lockType" : "write",

"waitingForLock" : false,

"secs_running" : 0,

"op" : "insert",

"ns" : "olympics.olympic_athlete_affiliation",

"client" : "127.0.0.1:63653",

"desc" : "conn"

}

]

}

I was able to see these when I was Bulk Loading Data

The HTTPConsole at http://localhost:28017/ (for default installation) also shows you all client connections as well as more information per thread, database uptime, replication status and a DBTOP for recent namespaces. For example:

mongodb mactazosx.local:27017

db version v1.4.3, pdfile version 4.5

git hash: 47ffbdfd53f46edeb6ff54bbb734783db7abc8ca

sys info: Darwin broadway.local 9.8.0 Darwin Kernel Version 9.8.0: Wed Jul 15 16:55:01 PDT 2009; root:xnu-1228.15.4~1/RELEASE_I386 i386 BOOST_LIB_VERSION=1_40

dbwritelocked: 0 (initial)

uptime: 2851 seconds

assertions:

replInfo:

Clients:

Thread OpId Active LockType Waiting SecsRunning Op NameSpace Query client msg progress

initandlisten 0 1 2004 test { name: /^local.temp./ } 0.0.0.0:0

snapshotthread 0 0 0 0.0.0.0:0

websvr 18 -1 2004 test._defaultCollection {} 0.0.0.0:0

conn 83741 -1 2004 olympics.olympic_host_city {} 127.0.0.1:63268

conn 83739 0 2004 ? { getlasterror: 1.0 } 127.0.0.1:63756

time to get dblock: 0ms

# databases: 3

Cursors byLoc.size(): 0

replication

master: 0

slave: 0

initialSyncCompleted: 1

DBTOP (occurences|percent of elapsed)

NS total Reads Writes Queries GetMores Inserts Updates Removes

GLOBAL 1 0.00% 1 0.00% 0 0.00% 1 0.00% 0 0.00% 0 0.00% 0 0.00% 0 0.00%

olympics.olympic_host_city 1 0.00% 1 0.00% 0 0.00% 1 0.00% 0 0.00% 0 0.00% 0 0.00% 0 0.00%

It was interesting to see a whatsmyuri command. Will need to investigate that further.

MongoDB Experience: Bulk Loading Data

mongoDB has a mongoimport command. The docs only shows the usage but not any examples. here are my first examples.

data1.csv

1 2 3 4 5 6 7 8 9 0

You need to specify your database (-d) and collection (-c) for importing. In my example, I also specified the collection fields with (-f)

The –file is actually optional, specifying the filename as the large argument also works.

mongoimport -d test -c foo -f a -type csv data connected to: 127.0.0.1 imported 10 objects

NOTE: The default type is JSON, so you can get some nasty errors if you forget the csv type.

Wed Jun 9 11:18:26 Assertion: 10340:Failure parsing JSON string near: 1 0x68262 0x23968 0x250563 0x251c7b 0x24cb00 0x250280 0x1af6 0 mongoimport 0x00068262 _ZN5mongo11msgassertedEiPKc + 514 1 mongoimport 0x00023968 _ZN5mongo8fromjsonEPKc + 520 2 mongoimport 0x00250563 _ZN6Import9parseLineEPc + 131 3 mongoimport 0x00251c7b _ZN6Import3runEv + 2635 4 mongoimport 0x0024cb00 _ZN5mongo4Tool4mainEiPPc + 2880 5 mongoimport 0x00250280 main + 496 6 mongoimport 0x00001af6 start + 54 exception:Failure parsing JSON string near: 1

In my second example I’m adding multiple fields. This time my data file also has a headers which you can ignore with (–headerline)

data2.csv

name, age Mickey Mouse,65 Minnie Mouse,64 Donald Duck, Taz Devil,22 Marvin the Martian,45

$ mongoimport -d test -c foo -f name,age -type csv --headerline data2.csv connected to: 127.0.0.1 imported 6 objects

> db.foo.find();

...

{ "_id" : ObjectId("4c0fb0dfa5cd86585be6ca63"), "a" : 0 }

{ "_id" : ObjectId("4c0fb2bea5cd86585be6ca64"), "name" : "Mickey Mouse", "age" : 65 }

{ "_id" : ObjectId("4c0fb2bea5cd86585be6ca65"), "name" : "Minnie Mouse", "age" : 64 }

{ "_id" : ObjectId("4c0fb2bea5cd86585be6ca66"), "name" : "Donald Duck" }

{ "_id" : ObjectId("4c0fb2bea5cd86585be6ca67"), "name" : "Taz Devil", "age" : 22 }

{ "_id" : ObjectId("4c0fb2bea5cd86585be6ca68"), "name" : "Marvin the Martian", "age" : 45 }

You can also use the –drop argument to truncate your collection before loading.

Real Data

I’m going to use the Freebase Olympics data to perform a more robust test.

wget http://download.freebase.com/datadumps/2010-04-15/browse/olympics.tar.bz2 bunzip2 olympics.tar.bz2 tar xvf olympics.tar cd olympics

Loading this data via the following convenience script gave me some more meaningful data.

> db.olympic_host_city.find();

{ "_id" : ObjectId("4c0fb666a5cd86585be7d9b6"), "name" : "Vancouver", "id" : "/guid/9202a8c04000641f80000000000401e2", "olympics_hosted" : "2010 Winter Olympics" }

{ "_id" : ObjectId("4c0fb666a5cd86585be7d9b7"), "name" : "Moscow", "id" : "/guid/9202a8c04000641f800000000002636c", "olympics_hosted" : "1980 Summer Olympics" }

{ "_id" : ObjectId("4c0fb666a5cd86585be7d9b8"), "name" : "St. Moritz", "id" : "/guid/9202a8c04000641f80000000001c33e8", "olympics_hosted" : "1948 Winter Olympics,1928 Winter Olympics" }

...

Here is the simple load script I used.

#!/bin/sh

load_file() {

local INPUT_FILE=$1

[ -z "${INPUT_FILE}" ] && echo "ERROR: File not specified" && return 1

echo "Loading file ${INPUT_FILE}"

COLLECTION=`echo ${INPUT_FILE} | cut -d. -f1`

FIELDS=`head -1 ${INPUT_FILE} | sed -e "s/ /,/g;s/ /_/g"`

echo "mongoimport -d olympics -c ${COLLECTION} -type tsv --headerline -f $FIELDS --drop ${INPUT_FILE}"

time mongoimport -d olympics -c ${COLLECTION} -type tsv --headerline -f $FIELDS --drop ${INPUT_FILE}

return 0

}

process_dir() {

echo "Processing" `pwd`

for FILE in `ls *.tsv`

do

load_file ${FILE}

done

return 0

}

main() {

process_dir

}

main $*

exit 0

MongoDB Experience: Online Resources

Following the initial Quickstart docs you will find a lot of online documentation. The following are good places to start.

There is also a Getting Started however I found this a duplication of the Quickstart. I have not found an offline version of the manual, or a single HTML page version. This makes it a little difficult for reading without internet connectivity.

You can find information at the Official Blog. While I found no Blog Roll, one developer Kyle Banker has a blog.

There are several Mailing Lists available however It seems your best help may be via IRC at irc://irc.freenode.net/#mongodb I found over 200 members on the channel.

There are currently no books on MongoDB however there seems to be 4 books in the making.

MongoDB Experience: Getting Started

Getting started with MongoDB is relatively straight forward, following the instructions from the Quickstart guide has you operational in a few minutes.

I like projects that provide a latest version link for software. There is no need to update any documentation or blog posts over time. The current instructions require some additional steps when creating the initial data directory, due to normal permissions of the root directory. This is the only pre-requisite to using the software out of the box. There is no additional configuration required for a default installation.

$ sudo mkdir /data/db $ sudo chown `id -u` /data/db

I ran a few boundary tests to verify the error handling of the initial startup process.

The following occurs when the data directory does not exist.

$ ./mongodb-osx-i386-1.4.3/bin/mongod ./mongodb-osx-i386-1.4.3/bin/mongod --help for help and startup options Tue Jun 8 15:59:52 Mongo DB : starting : pid = 78161 port = 27017 dbpath = /data/db/ master = 0 slave = 0 32-bit ** NOTE: when using MongoDB 32 bit, you are limited to about 2 gigabytes of data ** see http://blog.mongodb.org/post/137788967/32-bit-limitations for more Tue Jun 8 15:59:52 Assertion: 10296:dbpath (/data/db/) does not exist 0x68572 0x247814 0x24821a 0x24a855 0x1e06 0 mongod 0x00068572 _ZN5mongo11msgassertedEiPKc + 514 1 mongod 0x00247814 _ZN5mongo14_initAndListenEiPKc + 548 2 mongod 0x0024821a _ZN5mongo13initAndListenEiPKc + 42 3 mongod 0x0024a855 main + 4917 4 mongod 0x00001e06 start + 54 Tue Jun 8 15:59:52 exception in initAndListen std::exception: dbpath (/data/db/) does not exist, terminating Tue Jun 8 15:59:52 dbexit: Tue Jun 8 15:59:52 shutdown: going to close listening sockets... Tue Jun 8 15:59:52 shutdown: going to flush oplog... Tue Jun 8 15:59:52 shutdown: going to close sockets... Tue Jun 8 15:59:52 shutdown: waiting for fs preallocator... Tue Jun 8 15:59:52 shutdown: closing all files... Tue Jun 8 15:59:52 closeAllFiles() finished Tue Jun 8 15:59:52 dbexit: really exiting now

The following error occurs when the user has insufficient permissions for the directory.

$ sudo mkdir /data/db $ ./mongodb-osx-i386-1.4.3/bin/mongod ./mongodb-osx-i386-1.4.3/bin/mongod --help for help and startup options Tue Jun 8 16:01:52 Mongo DB : starting : pid = 78178 port = 27017 dbpath = /data/db/ master = 0 slave = 0 32-bit ** NOTE: when using MongoDB 32 bit, you are limited to about 2 gigabytes of data ** see http://blog.mongodb.org/post/137788967/32-bit-limitations for more Tue Jun 8 16:01:52 User Exception 10309:Unable to create / open lock file for dbpath: /data/db/mongod.lock Tue Jun 8 16:01:52 exception in initAndListen std::exception: Unable to create / open lock file for dbpath: /data/db/mongod.lock, terminating Tue Jun 8 16:01:52 dbexit: Tue Jun 8 16:01:52 shutdown: going to close listening sockets... Tue Jun 8 16:01:52 shutdown: going to flush oplog... Tue Jun 8 16:01:52 shutdown: going to close sockets... Tue Jun 8 16:01:52 shutdown: waiting for fs preallocator... Tue Jun 8 16:01:52 shutdown: closing all files... Tue Jun 8 16:01:52 closeAllFiles() finished Tue Jun 8 16:01:52 shutdown: removing fs lock... Tue Jun 8 16:01:52 couldn't remove fs lock errno:9 Bad file descriptor Tue Jun 8 16:01:52 dbexit: really exiting now

A missing step from the existing documentation is to set appropriate permissions to the data directory so that mongod run as your normal user can write to the directory.

$ sudo chown `id -u` /data/db $ ./mongodb-osx-i386-1.4.3/bin/mongod Tue Jun 8 16:06:37 Mongo DB : starting : pid = 78284 port = 27017 dbpath = /data/db/ master = 0 slave = 0 32-bit ** NOTE: when using MongoDB 32 bit, you are limited to about 2 gigabytes of data ** see http://blog.mongodb.org/post/137788967/32-bit-limitations for more Tue Jun 8 16:06:37 db version v1.4.3, pdfile version 4.5 Tue Jun 8 16:06:37 git version: 47ffbdfd53f46edeb6ff54bbb734783db7abc8ca Tue Jun 8 16:06:37 sys info: Darwin broadway.local 9.8.0 Darwin Kernel Version 9.8.0: Wed Jul 15 16:55:01 PDT 2009; root:xnu-1228.15.4~1/RELEASE_I386 i386 BOOST_LIB_VERSION=1_40 Tue Jun 8 16:06:37 waiting for connections on port 27017 Tue Jun 8 16:06:37 web admin interface listening on port 28017

You are then ready to rock and roll. Use the exit command to exit the mongo interactive shell.

$ ./mongodb-osx-i386-1.4.3/bin/mongo

> db.foo.save ({a:2});

> db.foo.find();

{ "_id" : ObjectId("4c0ea308f5ea2f7148a33b9f"), "a" : 2 }

> exit

bye

The mongod output of the first save of data into the “foo” collection shows a little of how mongodb operations. There is a lazy instantiation of “test” database when first required. There is no need to first create this, or use this as with MySQL.

Tue Jun 8 16:07:36 allocating new datafile /data/db/test.ns, filling with zeroes...

Tue Jun 8 16:07:36 done allocating datafile /data/db/test.ns, size: 16MB, took 0.05 secs

Tue Jun 8 16:07:37 allocating new datafile /data/db/test.0, filling with zeroes...

Tue Jun 8 16:07:38 done allocating datafile /data/db/test.0, size: 64MB, took 1.282 secs

Tue Jun 8 16:07:40 building new index on { _id: 1 } for test.foo

Tue Jun 8 16:07:40 Buildindex test.foo idxNo:0 { name: "_id_", ns: "test.foo", key: { _id: 1 } }

Tue Jun 8 16:07:40 done for 0 records 0.018secs

Tue Jun 8 16:07:40 insert test.foo 3181ms

$ ls -l /data/db total 163848 drwxr-xr-x 2 rbradfor admin 68 Jun 8 16:07 _tmp -rwxr-xr-x 1 rbradfor admin 6 Jun 8 16:06 mongod.lock -rw------- 1 rbradfor admin 67108864 Jun 8 16:07 test.0 -rw------- 1 rbradfor admin 16777216 Jun 8 16:07 test.ns

One observation is the output of the mongod is more a trace output. I have yet to see any information about a more appropriately formatted error log.

MongoDB Experience: History

My first exposure to MongoDB was in July 2008 when I was a panelist on “A Panel on Cloud Computing” at the Entrepreneurs Round Table in New York. The panel included a representative from 10gen the company behind the open source database product and at the time Mongo was described as a full stack solution with the database being only one future component.

While I mentioned Mongo again in a blog in Nov 2008, it was not until Oct 6 2009 at the NoSQL event in New York where I saw a more stable product and a revised focus of development just on the database component.

As the moderator for the closing keynote “SQL v NOSQL” panel at Open SQL Camp 2009 in Portland, Oregon I had the chance to discuss MongoDB with the other products in the NoSQL space. Watch Video

In just the past few weeks, 3 people independently have mentioned MongoDB and asked for my input. I was disappointed to just miss the MongoNYC 2010 event.

While I have evaluated various new products in the key/value store and the schemaless space, my curiosity has been initially more with Cassandra and CouchDB.

Follow my journey as I explore in more detail the usage of mongoDB {name: “mongo”, type:”db”} via the mongodb tag on my blog.

Oracle resources for the MySQL Community

While I have spent a lot of time recently helping the MySQL community interact with and integrate with various Oracle User Groups including ODTUG, IOUG, NoCOUG, NYOUG, DAOG I thought I’d share some resources for the MySQL Community that wanted to know more about Oracle.

The Oracle family of products is huge. You only have to look at the acquisitions via Wikipedia to get an idea. The first thing is to narrow your search, e.g. Database, APEX, Middleware, BI, Hyperion, Financials, development via Java, PHP or Oracle Forms etc.

While Oracle is a commercial product you can download all software for FREE via Oracle Technology Network. There is also documentation, forums, blogs and events.

Some Oracle bloggers I have already been reading however I’m expanding my list. People you may want to consider include:

Cary Millsap,Lewis Cunningham, Debra Lilley, Dimitri Gielis,Duncan Mills, Edward Roske, Mark Rittman, Scott Spendolini, Tim Tow, Tom Kyte

If you want a comparison of the Oracle and MySQL community, be sure to also check out Sheeri Cabral’s keynote address at the 2010 MySQL User Conference for reference.

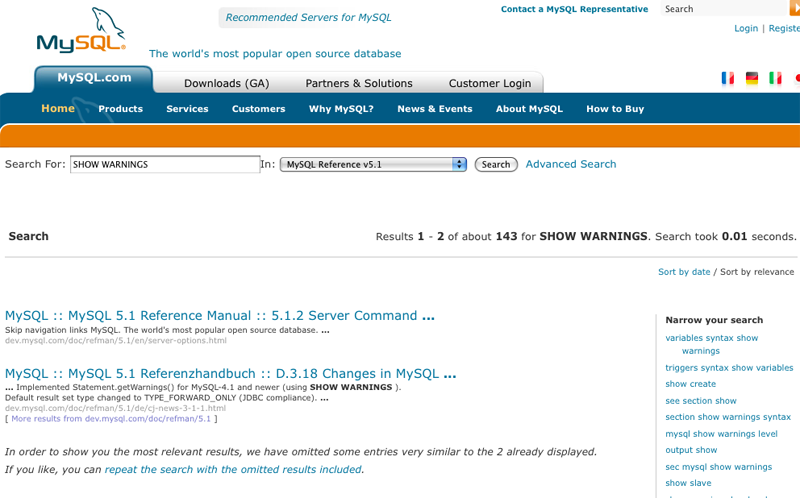

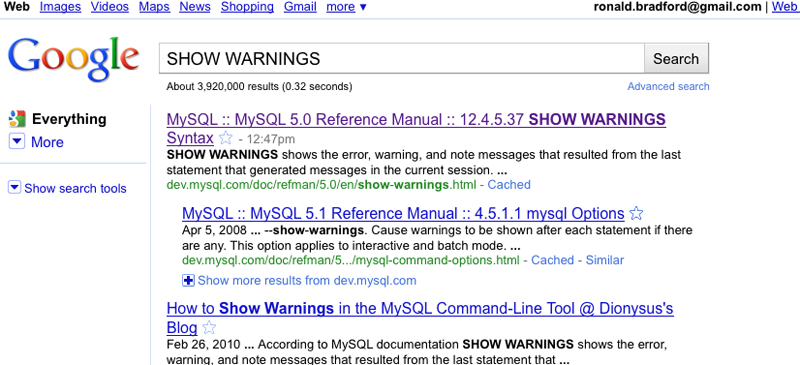

Why is MySQL documentation search so wrong?

I just don’t get this I don not know what the technology is behind the search box at MySQL Documentation but it annoys me when I want to see the syntax of a command and Search can’t find the page, when a dedicated page exists and I’m using the exact syntax of both the command the title of the page. In this example the search was for SHOW WARNINGS.

Google find the page as first link!

I'll have a MySQL shot to go!

Wednesday night of the MySQL track of ODTUG Kaleidoscope will include an evening with Last Comic Standing comedian, John Heffron. It should be great way to unwind after day 3 of the conference. Black vodka anybody.

Check out the MySQL Schedule for more information of presentations for the 4 days. More details is also available here.

When SET GLOBAL affects SESSION scope

We have all been caught out with using SET and not realizing that the default GLOBAL Scope (since 5.0.2) does not change the current SESSION scope.

I was not aware until today that changing GLOBAL scope has some exceptions that also automatically affect SESSION scope.

What I expected with a change in the GLOBAL scope is no affect SESSION scope. For example.

mysql> SHOW GLOBAL VARIABLES LIKE 'read_buffer_size'; +------------------+--------+ | Variable_name | Value | +------------------+--------+ | read_buffer_size | 131072 | +------------------+--------+ 1 row in set (0.00 sec) mysql> SHOW SESSION VARIABLES LIKE 'read_buffer_size'; +------------------+--------+ | Variable_name | Value | +------------------+--------+ | read_buffer_size | 131072 | +------------------+--------+ 1 row in set (0.00 sec) mysql> SET GLOBAL read_buffer_size=1024*256; Query OK, 0 rows affected (0.00 sec) mysql> SHOW GLOBAL VARIABLES LIKE 'read_buffer_size'; +------------------+--------+ | Variable_name | Value | +------------------+--------+ | read_buffer_size | 262144 | +------------------+--------+ 1 row in set (0.00 sec) mysql> SHOW SESSION VARIABLES LIKE 'read_buffer_size'; +------------------+--------+ | Variable_name | Value | +------------------+--------+ | read_buffer_size | 131072 | +------------------+--------+ 1 row in set (0.00 sec)

However I was no prepared for this when changing an important variable for transaction management.

mysql> SHOW GLOBAL VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | ON | +---------------+-------+ 1 row in set (0.00 sec) mysql> SHOW SESSION VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | ON | +---------------+-------+ 1 row in set (0.00 sec) mysql> SET autocommit=0; Query OK, 0 rows affected (0.00 sec) mysql> SHOW GLOBAL VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | OFF | +---------------+-------+ 1 row in set (0.00 sec) mysql> SHOW SESSION VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | OFF | +---------------+-------+ 1 row in set (0.00 sec)

However even more perplexing was the following message:

mysql> SET GLOBAL autocommit=0; ERROR 1228 (HY000): Variable 'autocommit' is a SESSION variable and can't be used with SET GLOBAL

So this is another case were the definition of variables is not applicable in a GLOBAL level, yet the tools of the trade represent in some manner misleading information.

To prove my point, here is another new concurrent session started after the above.

mysql> SHOW GLOBAL VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | ON | +---------------+-------+ 1 row in set (0.01 sec) mysql> SHOW SESSION VARIABLES LIKE 'autocommit'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | autocommit | ON | +---------------+-------+ 1 row in set (0.00 sec)

The MySQL Documentation also had an incorrect specification with description regarding this scope, Bug #54215

Best Practices: Additional User Security

By default MySQL allows you to create user accounts and privileges with no password. In my earlier MySQL Best Practices: User Security I describe how to address the default installation empty passwords.

For new user accounts, you can improve this default behavior using the SQL_MODE variable, with a value of NO_AUTO_CREATE_USER. As detailed via the 5.1 Reference Manual

NO_AUTO_CREATE_USER

Prevent the GRANT statement from automatically creating new users if it would otherwise do so, unless a nonempty password also is specified.

Having set this variable I attempted to show the error of operation to demonstrate in my upcoming “MySQL Idiosyncrasies that bite” presentation.

Confirm Settings

mysql> show global variables like 'sql_mode'; +---------------+---------------------+ | Variable_name | Value | +---------------+---------------------+ | sql_mode | NO_AUTO_CREATE_USER | +---------------+---------------------+ 1 row in set (0.00 sec) mysql> show session variables like 'sql_mode'; +---------------+---------------------+ | Variable_name | Value | +---------------+---------------------+ | sql_mode | NO_AUTO_CREATE_USER | +---------------+---------------------+ 1 row in set (0.00 sec)

Create error condition

mysql> CREATE USER superuser@localhost; Query OK, 0 rows affected (0.00 sec) mysql> GRANT ALL ON *.* TO superuser@localhost; Query OK, 0 rows affected (0.00 sec) mysql> exit

What the? Surely this isn’t right.

$ mysql -usuperuser mysql> SHOW GRANTS; +--------------------------------------------------------+ | Grants for superuser@localhost | +--------------------------------------------------------+ | GRANT ALL PRIVILEGES ON *.* TO 'superuser'@'localhost' | +--------------------------------------------------------+ mysql> SELECT VERSION(); +-----------+ | VERSION() | +-----------+ | 5.1.39 | +-----------+

Well that’s broken functionality.

What should happen as described in Bug #43938 is a cryptic message as reproduced below.

mysql> GRANT SELECT ON foo.* TO 'geert12'@'localhost'; ERROR 1133 (42000): Can't find any matching row in the user table mysql> GRANT SELECT ON *.* TO geert12@localhost IDENTIFIED BY 'foobar'; Query OK, 0 rows affected (0.00 sec)

It seems however that the user of CREATE USER first nullifies this expected behavior, hence new Bug #54208.

Eventually consistent Group Commit

Having just written an interview response about NoSQL concepts for a RDBMS audience it was poetic that an inconspicuous title “(4 of 3)” highlights that both a MySQL read scalable implementation via replication and a NoSQL solution can share a common lack of timely consistency of data. For the sake of Group Commit I hope my data is always consistent at some location at some point in time as soon as possible.

In attempting to comment to Kristian Nielsen’s Fixing MySQL group commit (part 4 of 3) I was forced to watch an ad before I could even add a comment. Go jump Live Journal, it’s quicker to write my own blog post.

And if anybody is still reading, I had just written the following.

“There is clearly a place for NoSQL solutions. The two primary types of products are a key/value store and a schema-less solution. You need to learn the strengths, benefits and weaknesses of both. For a RDBMS resource the lack of transactions, the lack of joins and the concept of eventually consistent can take some time to accept.”

mk-query-digest Tips – Showing all hosts & users

The Maatkit tools provide a suite of additional MySQL commands. There is one command I use constantly and that is mk-query-digest.

Unfortunately the documentation does leave a lot to be desired for usability. While throughout, it is a man page and not a user guide. Several of us have discussed writing better documentation however it’s always a matter of time. I have however learned a number of tips and I’d like to share them in smaller digests.

The first is showing additional display. Maatkit works on truncating per line output to a reasonable length of 73 characters?

One of those lines is the list of hosts that connected to MySQL for a query, for example.

# Hosts 4 192.168.40... (2), 192.168.40... (2)... 2 more # Hosts 3 99.99.245.14 (12), 999.106.206.167 (6)... 1 more

The problem is I want to know what that 1 more is so I can gather a complete list of IP addresses that connect to this server. You do that with the –show-all=host argument.

Without

$ cat external.tcpdump | ./mk-query-digest --type tcpdump | grep Hosts | uniq -c

#

1 # Hosts 3 99.99.245.14 (12), 999.106.206.167 (6)... 1 more

1 # Hosts 1 99.99.139.140

With

$ cat external.tcpdump | ./mk-query-digest --type tcpdump --show-all=host | grep Hosts | uniq -c

1 # Hosts 3 99.99.245.14 (12), 999.106.206.167 (6), 99.99.139.140 (2)

1 # Hosts 1 99.99.139.140

You can apply the same principle to the Users as well with –show-all=user

$ cat external.tcpdump | ./mk-query-digest --type tcpdump --show-all=user | grep Users | uniq -c

1 # Users 2 xxx (13), phpmysqlmo... (5)

49 # Users 1 xxx

The problem is a still gett a truncation of the name ‘phpmysqlmo…’ That’s the one thing I’m trying to uncover, because that IP and usernme are not valid permissions for this system.

ImageMagick on Mac OS X

Wanting to do some image manipulation I realized my Linux scripts don’t run under Mac OS X, as ImageMagick is not installed via my MacPorts.

However installation failed:

$ sudo port install imagemagick ---> Computing dependencies for ImageMagick ---> Verifying checksum(s) for xorg-libX11 Error: Checksum (md5) mismatch for libX11-1.3.3.tar.bz2 Error: Checksum (sha1) mismatch for libX11-1.3.3.tar.bz2 Error: Checksum (rmd160) mismatch for libX11-1.3.3.tar.bz2 Error: Target org.macports.checksum returned: Unable to verify file checksums Error: The following dependencies failed to build: xorg-libXext xorg-libX11 xorg-libXt xorg-libsm xorg-libice Error: Status 1 encountered during processing. Before reporting a bug, first run the command again with the -d flag to get complete output.

Figuring that some of my packages may require upgrade:

$ sudo port selfupdate sudo port -d upgrade outdated

The problem is this all failed. Turning to the FAQ it seemed what I needed to do was remove and re-install the offending package receiving the checksum error via the following syntax.

$ sudo port clean --all$ sudo port install

It seemed I had to do this for several packages manually however in the end removing and installing a number of packages addressed the problem and now ImageMagick is happily running on Mac OS X

bash-3.2$ sudo port clean --all xorg-libX11 ---> Cleaning xorg-libX11 bash-3.2$ sudo port install xorg-libX11 ----> Computing dependencies for ImageMagick ---> Verifying checksum(s) for xorg-libX11 Error: Checksum (md5) mismatch for libX11-1.3.3.tar.bz2 Error: Checksum (sha1) mismatch for libX11-1.3.3.tar.bz2 Error: Checksum (rmd160) mismatch for libX11-1.3.3.tar.bz2 Error: Target org.macports.checksum returned: Unable to verify file checksums Error: The following dependencies failed to build: xorg-libXext xorg-libX11 xorg-libXt xorg-libsm xorg-libice Error: Status 1 encountered during processing. Before reporting a bug, first run the command again with the -d flag to get complete output. bash-3.2$ sudo port clean --all libX11 Error: Port libX11 not found Before reporting a bug, first run the command again with the -d flag to get complete output. bash-3.2$ sudo port clean --all xorg-libX11 ---> Cleaning xorg-libX11 bash-3.2$ sudo port install xorg-libX11