I had to laugh (just a bit) at this on the exhibitor floor at Oracle Open World 2013. There was a large MongoDB presence at the Slot 301. There are a few reasons.

First, the identity crisis remains. There is no MongoDB in the list of exhibitors, it’s 10gen, but where is the 10gen representation in the sign. 99.99% of attendees would not know this.

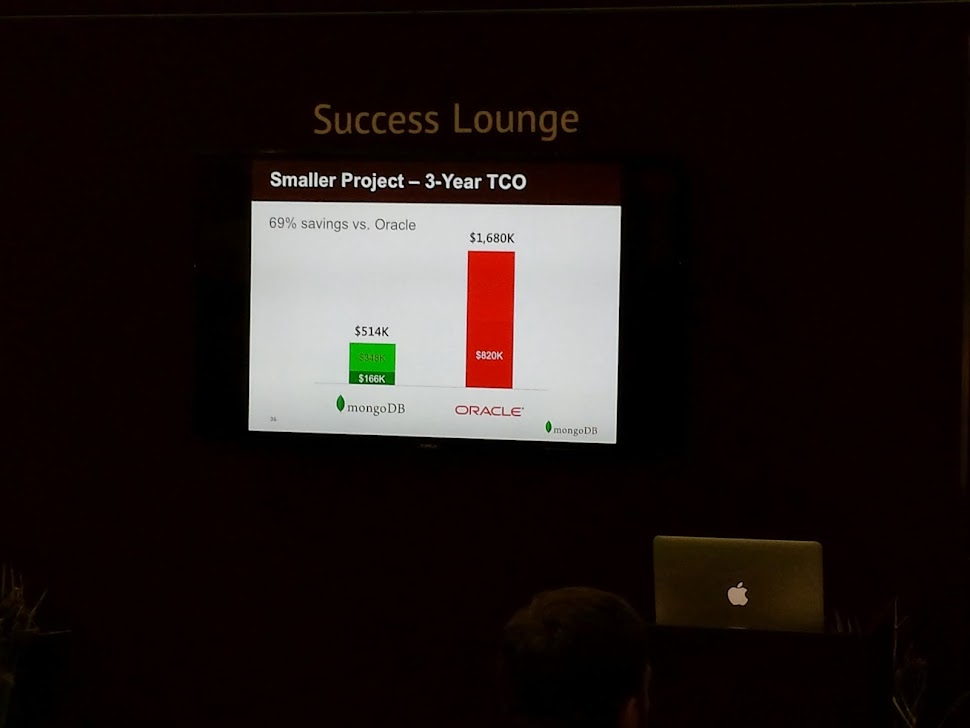

Second, the first and only slide I saw (as shown below), tries to directly compare implementing a solution to Oracle. The speaker made some comment but I really zoned out quickly. Having worked with MongoDB, even on one of my own projects, contemplated the ROI of being proficient in this for consulting, even discussing at length with the CEO and CTO, and hearing only issues with MongoDB with existing MySQL clients, I have come to the conclusion that MongoDB is a niche product. It’s great for a very particular situation, and absolutely not suitable for general use to replace a relational database (aka something with transactions to start with). A young and eager 10gen employee approached me, all excited to convince me of the savings. My first question to him was, how long have you been working at 10gen? After he responded 6 months, I informed him that I knew more about his product and specifically the ecosystem he was now in.

Finally, it was rather sad to think that 10gen/MongoDB was not interested in exhibiting in the MySQL Connect conference, a competitor product in it’s space. They obviously feel that MySQL is dead, and no longer even a viable competitor in the market space.

I have nothing personal against MongoDB, and it continues to mature as a product, however it’s a niche product with some strengths over a RDBMS in a minority of points. It definitely is not the right product for general OLTP applications.