SQL drift frequently occurs during:

- On-premises to cloud migrations

- Cloud to managed service transitions

- Cross-product migrations (e.g., switching database vendors)

- Database version upgrades

- Platform modernization efforts

The implications of SQL drift can be significant: application instability, increased operational costs, and delayed migration timelines. It can also compromise data quality and degrade the user experience as systems become less reliable and responsive. Successfully managing SQL drift involves four key stages:

- Identification

- Prioritization

- Correction

- Validation

Identification is the critical first step in managing SQL drift, focusing on systematically discovering potential issues. This phase involves detecting SQL statements that may behave differently in the new environment, analyzing syntax compatibility across platforms, establishing performance baselines, and validating data outputs to ensure consistency.

Prioritization involves evaluating SQL drift issues based on business impact, risk assessment, resource allocation, and migration scheduling to determine the optimal order for resolution.

Correction addresses SQL drift through code remediation, performance optimization, syntax updates, and developing alternative solutions when necessary.

Validation confirms SQL drift corrections through comprehensive testing, performance verification against established baselines, and data integrity checks to ensure the corrected SQL maintains its intended functionality.
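As a rough illustration of the prioritization stage, the sketch below ranks findings by severity and then by how often each statement runs. It is a minimal sketch, not how Next BaseLine works: the `Finding` class, the `SEVERITY` weights, and the execution counts are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical severity weights: a hard error blocks a migration,
# while a deprecation only signals future risk.
SEVERITY = {"error": 3, "deprecation": 2, "warning": 1, "notice": 0}


@dataclass
class Finding:
    sql: str
    kind: str        # one of the SEVERITY keys
    executions: int  # made-up count of runs in a captured workload


def prioritize(findings):
    """Order findings so the most severe, most frequently run statements come first."""
    return sorted(
        findings,
        key=lambda f: (SEVERITY[f.kind], f.executions),
        reverse=True,
    )


findings = [
    Finding("SELECT SQL_NO_CACHE 1;", "deprecation", 500),
    Finding("SELECT ENCRYPT('BaseLine',1);", "error", 20),
    Finding("SELECT DES_ENCRYPT('BaseLine');", "error", 75),
]
ordered = prioritize(findings)
for f in ordered:
    print(f.kind, f.executions, f.sql)
```

A real assessment would likely weigh business impact and risk as well, as described above; severity and frequency are simply the two signals that are easiest to sketch.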

An effective way to demonstrate the impact of SQL drift is by using a sample collection of SQL statements executed across different versions of MySQL. The End of Life (EOL) for MySQL 5.7, coupled with AWS RDS and AWS RDS Aurora beginning extended support in 2024, has increased costs for organizations that are not proactive in managing database migrations. This situation is particularly common in development-focused teams that lack dedicated architecture and operations resources.

A MySQL demonstration of SQL drift

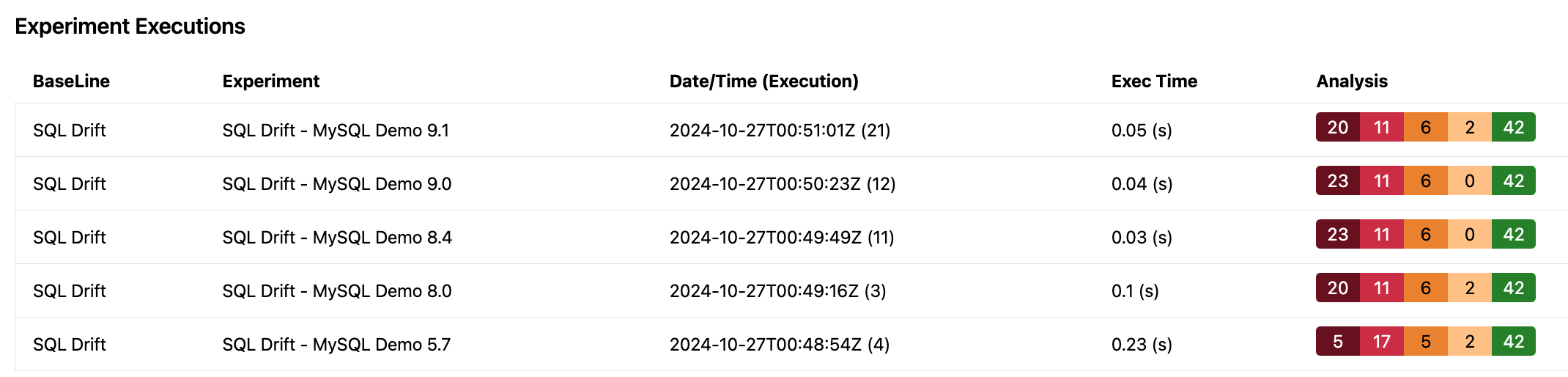

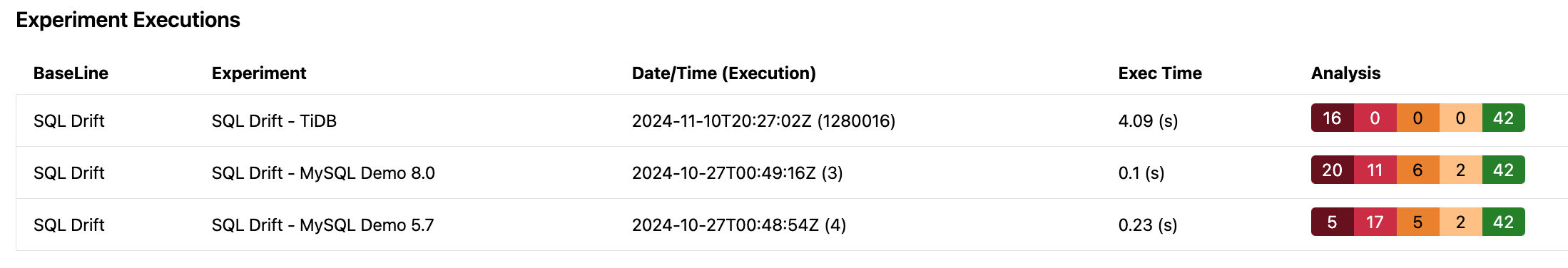

Using a subset of SQL statements executed in MySQL 5.7 and the subsequent MySQL versions 8.0, 8.4, 9.0, and 9.1, Next BaseLine can examine the impact of SQL drift. This output shows the changing state of errors, deprecations, warnings, and notices for the 42 example SQL statements.

Example Output from Next BaseLine

In MySQL 5.7, using the keyword SQL_NO_CACHE in an SQL statement produces a deprecation warning.

17 Deprecations

ID: 5, Hash: f31f2e99b2

SQL: "SELECT SQL_NO_CACHE 1;"

Deprecation: (1681) 'SQL_NO_CACHE' is deprecated and will be removed in a future release.

In MySQL 8.0, the MySQL Query Cache was removed, but SQL_NO_CACHE is still valid in SQL statements. Even in the next GA version, 8.4, this SQL keyword is still on the deprecated list, and it continues to be deprecated in the current 9.1 innovation release.

Other examples of deprecated functions are ENCRYPT and DES_ENCRYPT.

ID: 17, Hash: 947fcef53a

SQL: "SELECT ENCRYPT('BaseLine',1);"

Deprecation: (1287) 'ENCRYPT' is deprecated and will be removed in a future release. Please use AES_ENCRYPT instead

ID: 18, Hash: 364c0ffbf4

SQL: "SELECT DES_ENCRYPT('BaseLine');"

Deprecation: (1287) 'DES_ENCRYPT' is deprecated and will be removed in a future release. Please use AES_ENCRYPT instead

In MySQL 8.0, these SQL statements produce a hard error. Rather than reporting “FUNCTION does not exist”, MySQL treats the removed built-in functions as routines in the schema in use (airport) and reports that the user is denied permission to execute them. (More on this later.)

ID: 17, Hash: 947fcef53a

SQL: "SELECT ENCRYPT('BaseLine',1);"

Error 1370 (42000): execute command denied to user 'nextbaseline'@'%' for routine 'airport.ENCRYPT'

ID: 18, Hash: 364c0ffbf4

SQL: "SELECT DES_ENCRYPT('BaseLine');"

Error 1370 (42000): execute command denied to user 'nextbaseline'@'%' for routine 'airport.DES_ENCRYPT'

Some GIS SQL statements are also reported as deprecated in MySQL 5.7, although each carries a different warning number.

ID: 19, Hash: f319748e0c

SQL: "SELECT CONTAINS(ST_GeomFromText('POLYGON((0 0, 0 10, 10 10, 10 0, 0 0))'), ST_GeomFromText('POINT(5 5)'));"

Deprecation: (1287) 'CONTAINS' is deprecated and will be removed in a future release. Please use MBRCONTAINS instead

ID: 20, Hash: d686267b19

SQL: "SELECT ST_GeomFromWKB(Point(0, 0));"

Deprecation: (3195) st_geometryfromwkb(geometry) is deprecated and will be replaced by st_srid(geometry, 0) in a future version. Use st_geometryfromwkb(st_aswkb(geometry), 0) instead.

In MySQL 8.0+, these two deprecated statements become hard errors, each with a different error message.

ID: 19, Hash: f319748e0c

SQL: "SELECT CONTAINS(ST_GeomFromText('POLYGON((0 0, 0 10, 10 10, 10 0, 0 0))'), ST_GeomFromText('POINT(5 5)'));"

Error 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near '(ST_GeomFromText('POLYGON((0 0, 0 10, 10 10, 10 0, 0 0))'), ST_GeomFromText('POI' at line 1

ID: 20, Hash: d686267b19

SQL: "SELECT ST_GeomFromWKB(Point(0, 0));"

Error 3037 (22023): Invalid GIS data provided to function st_geomfromwkb.
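The comparison shown above can be sketched as a simple diff of per-statement outcomes between two runs. This is a minimal sketch under stated assumptions: `find_drift` and the dictionary layout are hypothetical, and the outcomes are transcribed from the examples quoted in this article rather than produced by any tool.

```python
def find_drift(before, after):
    """Return {statement_id: (old_outcome, new_outcome)} for outcomes that changed."""
    changed = {}
    for sid, old in before.items():
        new = after.get(sid)  # None if the statement was not run in the second pass
        if new != old:
            changed[sid] = (old, new)
    return changed


# Outcomes as (kind, code), keyed by the statement IDs quoted above.
mysql_57 = {
    5: ("deprecation", 1681),
    17: ("deprecation", 1287),
    18: ("deprecation", 1287),
    19: ("deprecation", 1287),
    20: ("deprecation", 3195),
}
mysql_80 = {
    5: ("deprecation", 1681),  # assumption: same warning code as in 5.7
    17: ("error", 1370),
    18: ("error", 1370),
    19: ("error", 1064),
    20: ("error", 3037),
}

drifted = find_drift(mysql_57, mysql_80)
for sid, (old, new) in sorted(drifted.items()):
    print(sid, old, "->", new)
```

Statement 5 does not appear in the result because its outcome is unchanged, while the four statements that moved from a deprecation to a hard error do.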

Migrating a WordPress site

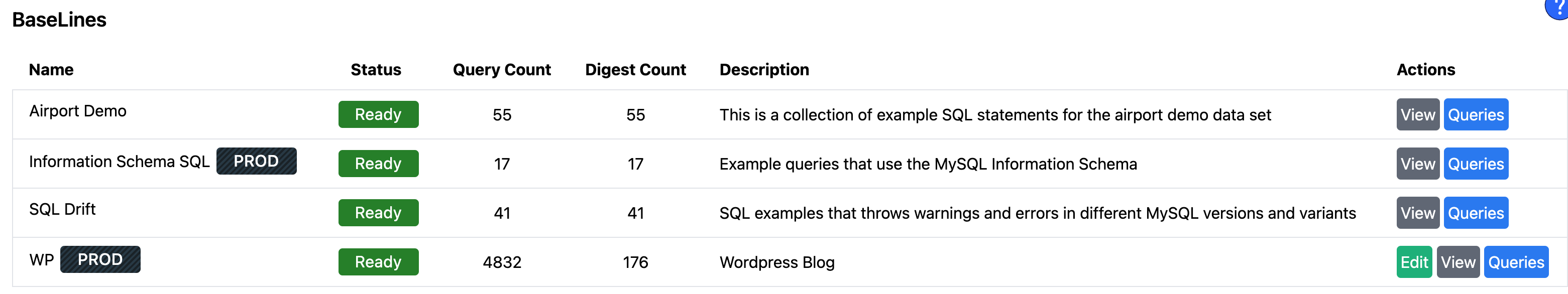

A more realistic example would involve taking a production workload, such as WordPress running on a self-hosted MySQL 5.7 server, and assessing the potential impact of switching to MySQL 8.0 without upgrading the application (not a recommended approach). We have collected representative production SQL statements for this WordPress setup, referred to as a BaseLine.

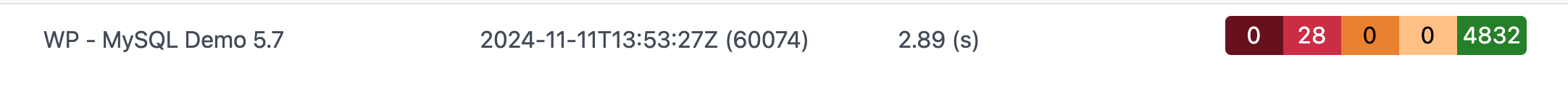

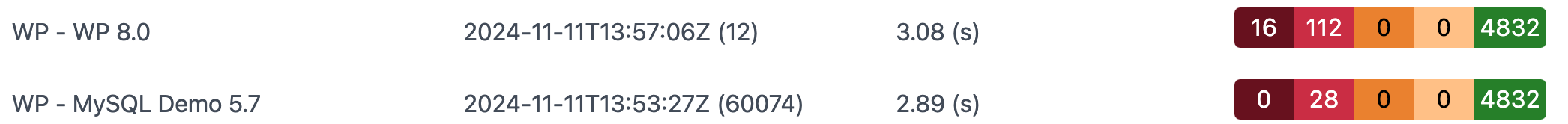

After collecting SQL traffic and testing this workload against a MySQL 5.7 environment, previously unnoticed SQL warnings were highlighted for the team.

When executed against an upgraded MySQL 8.0 instance, problematic SQL statements were immediately identified. For a larger, more complex product, this process would help prioritize where resources are most needed.

A modern cloud database implementation

Finally, let’s consider TiDB from PingCAP as an example of validating your application with a cloud implementation. Using the same small set of 42 SQL statements, TiDB has taken a proactive approach by entirely eliminating warnings in their MySQL protocol. In TiDB, SQL statements are now either valid SQL syntax or produce a hard error.

What was a deprecation for ENCRYPT is now a hard error. TiDB also provides a more accurate error message: ‘FUNCTION does not exist’.

ID: 17, Hash: 947fcef53a

SQL: "SELECT ENCRYPT('BaseLine',1);"

Error 1305 (42000): FUNCTION ENCRYPT does not exist

ID: 18, Hash: 364c0ffbf4

SQL: "SELECT DES_ENCRYPT('BaseLine');"

Error 1305 (42000): FUNCTION DES_ENCRYPT does not exist

In MySQL 5.7, ENCODE was deprecated and in MySQL 8.0+ it was removed. In TiDB, it is a valid function.

TiDB also produces some interesting artifacts in error messages for SQL statements that are not seen with MySQL. An example is Error 1235 ... has only noop implementation in tidb now .... The message, however, shows that a setting (tidb_enable_noop_functions) can change the status of these SQL statements.

ID: 14, Hash: dbcb4b05a2

SQL: "SELECT table_name, count(*) FROM information_schema.tables GROUP BY table_name ASC;"

Error 1235 (42000): function GROUP BY expr ASC|DESC has only noop implementation in tidb now, use tidb_enable_noop_functions to enable these functions

...

ID: 26, Hash: 7369c77d51

SQL: "SELECT SQL_CALC_FOUND_ROWS * FROM information_schema.schemata;"

Error 1235 (42000): function SQL_CALC_FOUND_ROWS has only noop implementation in tidb now, use tidb_enable_noop_functions to enable these functions
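Errors like these are worth separating from genuine incompatibilities, because a configuration change rather than a code change may resolve them. The sketch below parses error lines in the format quoted in this article and flags the ones that point at the noop setting. The function names and the regular expression are hypothetical and assume only the line format shown here.

```python
import re

# Matches error lines in the format quoted in this article, e.g.
# "Error 1305 (42000): FUNCTION ENCRYPT does not exist".
ERROR_LINE = re.compile(
    r"Error (?P<code>\d+) \((?P<sqlstate>\w+)\): (?P<message>.*)"
)


def parse_error(line):
    """Split an error line into code, SQLSTATE, and message; None if it does not match."""
    m = ERROR_LINE.search(line)
    if m is None:
        return None
    return {
        "code": int(m["code"]),
        "sqlstate": m["sqlstate"],
        "message": m["message"],
    }


def is_noop_error(err):
    """True when the message points at the tidb_enable_noop_functions setting."""
    return err["code"] == 1235 and "tidb_enable_noop_functions" in err["message"]


noop = parse_error(
    "Error 1235 (42000): function SQL_CALC_FOUND_ROWS has only noop implementation "
    "in tidb now, use tidb_enable_noop_functions to enable these functions"
)
missing = parse_error("Error 1305 (42000): FUNCTION ENCRYPT does not exist")
print(is_noop_error(noop), is_noop_error(missing))
```

Whether enabling the setting is appropriate is a separate decision, since a noop implementation accepts the syntax without necessarily reproducing the MySQL behavior.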

During development, an unintended bug in early testing submitted two statements as a single string, which produced an interesting error with TiDB.

ID: 31, Hash: 9cae50cbfc

SQL: "SELECT DATE('2024-01-01 10:00:00'); /* Example of bad data causing warning */SELECT 'abc' AS full;"

Error 8130 (HY000): client has multi-statement capability disabled. Run SET GLOBAL tidb_multi_statement_mode='ON' after you understand the security risk
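A captured workload can be checked for this situation before it is replayed. The sketch below counts the statements in a string while ignoring semicolons inside quoted literals and `/* */` comments. It is a simplified, hypothetical helper: it does not handle `--` or `#` comments or custom delimiters, and it is not a substitute for a real SQL parser.

```python
def count_statements(sql):
    """Count semicolon-separated statements, skipping quotes and /* */ comments."""
    count = 0
    has_text = False  # True once the current statement contains non-space text
    quote = None      # the active quote character, if inside a literal
    i = 0
    while i < len(sql):
        ch = sql[i]
        if quote:
            if ch == "\\":
                i += 1  # skip the escaped character
            elif ch == quote:
                quote = None
        elif sql.startswith("/*", i):
            end = sql.find("*/", i + 2)
            # Jump to the last character of the comment (or the end of input).
            i = len(sql) if end == -1 else end + 1
        elif ch in "'\"`":
            quote = ch
            has_text = True
        elif ch == ";":
            if has_text:
                count += 1
            has_text = False
        elif not ch.isspace():
            has_text = True
        i += 1
    # A trailing statement without a terminating semicolon still counts.
    return count + (1 if has_text else 0)


sample = (
    "SELECT DATE('2024-01-01 10:00:00'); "
    "/* Example of bad data causing warning */SELECT 'abc' AS full;"
)
print(count_statements(sample))
```

Any string for which the count exceeds one could be split or flagged before it reaches a server or client that has multi-statement mode disabled.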

Conclusion

Next BaseLine is now available in limited beta. Eliminate the uncertainty around “Will the migration work?” by performing an independent risk assessment of your product in a migrated database environment before committing to ad-hoc engineering efforts. If you’re interested in seeing a demo with your own SQL workload, you can register here.

Next BaseLine currently supports MySQL, PostgreSQL, Oracle, and SQL Server RDBMS products, covering both self-hosted and cloud-managed implementations across AWS, GCP, Azure, and Alibaba. It supports multiple MySQL- and PostgreSQL-compatible databases, including TiDB, SingleStore, Neon Serverless, Nile, ElephantSQL, TimeScale, and more. Additional compatibility is available for Snowflake, ClickHouse, and DuckDB.