At Oracle Openworld this year, Oracle OpenStack Release 2 was announced. This Kilo based distribution included some new deployment features not see in other OpenStack distros including the use of Kolla, Docker and MySQL Cluster. The press release states “First commercially available OpenStack implementation completely packaged as Docker instances”.

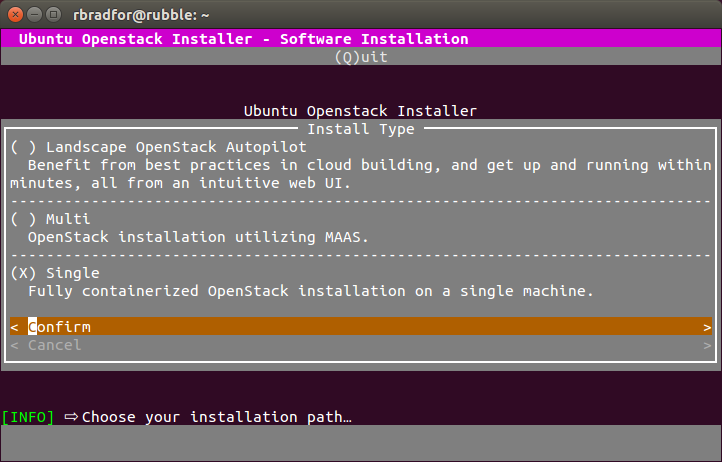

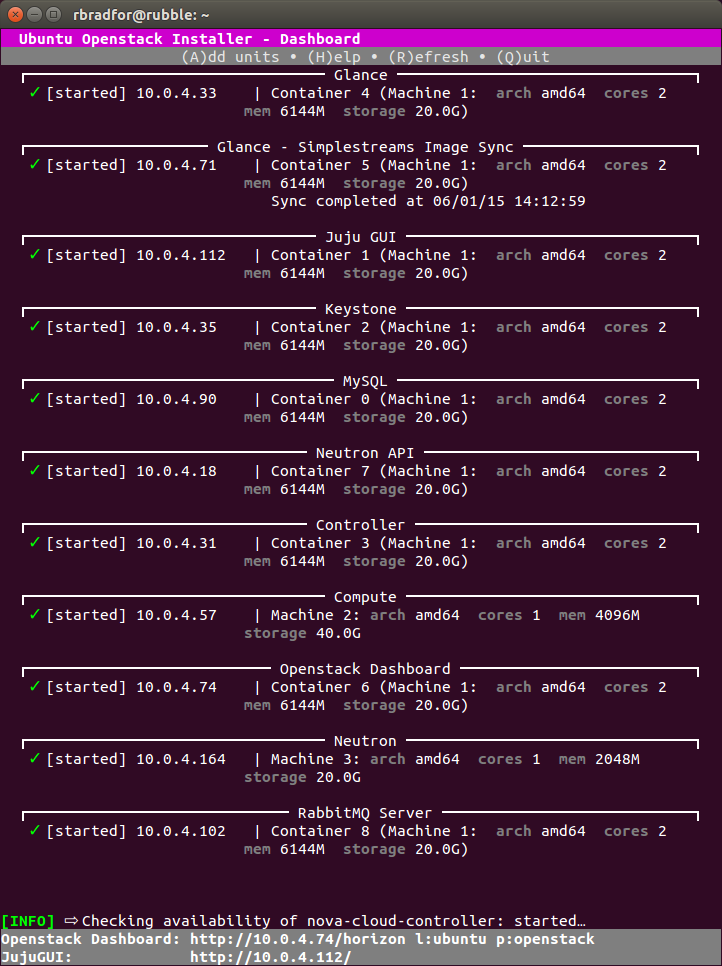

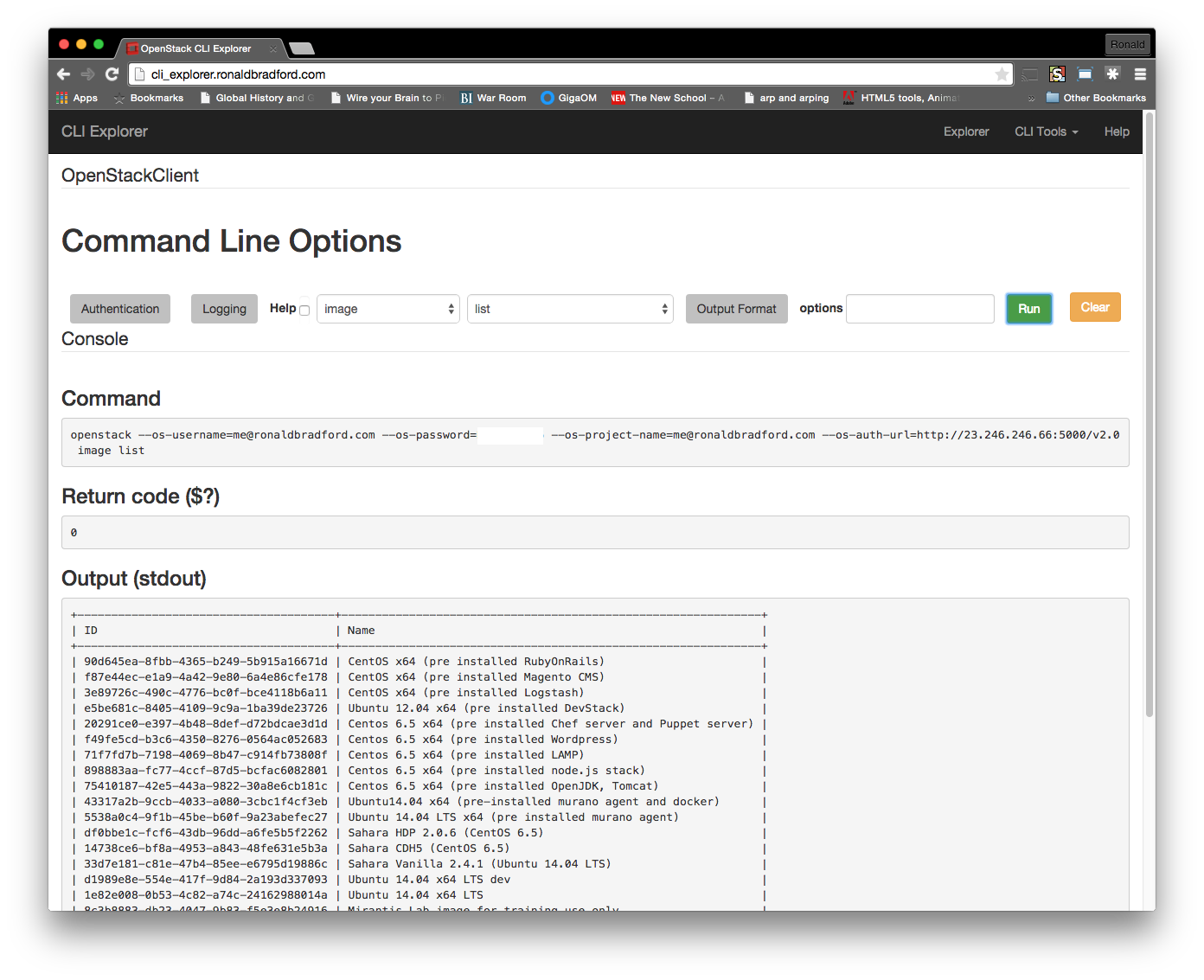

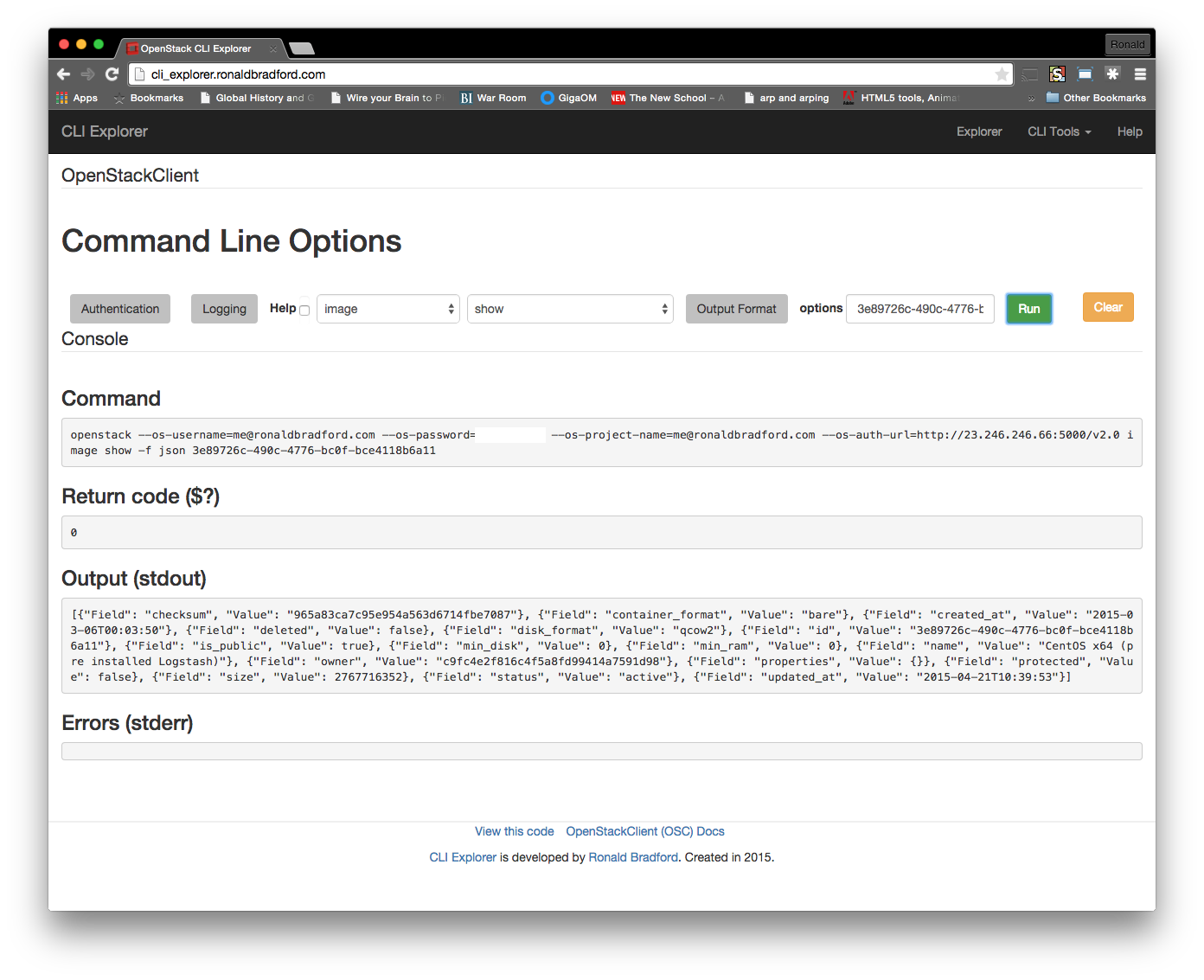

Using Docker to containerize each component of services is a very convenient means of dev/test/prod management. Your single node developer environment gets HA automatically, you can easily deploy to two or more management nodes varying services, and they look and act just like your production environment. I have often struggled with developing in OpenStack either with single project environments, creating a devstack, a previously installed 3 node physical server deployment which takes up room and power in my office, and also comparing other single node solutions including Canonical and Mirantis. I am often left to using online services such as Mirantis Express, TryStack and HP Cloud to more easily evaluate the end product, but without any access to the operating cloud under the covers.

It is an interesting move to using MySQL Cluster. I liked this announcement. This is a very robust Master/Master MySQL compatible product that starts with a High Availability implemented through a shared nothing architecture. You get the benefit of dynamic adding of data shards as your system grows. However MySQL Cluster is a very different product under the covers. For those familiar with managing MySQL server a different set of skills are required, starting with the concept of a management node, data nodes and SQL nodes and the different ways to manage, monitor and triage. MySQL Cluster is effectively an in-memory solution so this will require some additional sizing considerations especially for production deployments. Your backup/recovery/disaster recovery strategy will also change. All of this administration exists for MySQL Cluster, it is just different. If you only use MySQL server these are new skills to master. As an expert in MySQL server and having not used MySQL Cluster for at least 7 years, I cannot provide an insights for example of the use of common monitoring tools (including newer SaaS offerings). Still, MySQL Cluster is an extremely stable and production ready product, that scales to millions of QPS easily. Using this as a HA solution gives you a rock solid base which is what you need first.

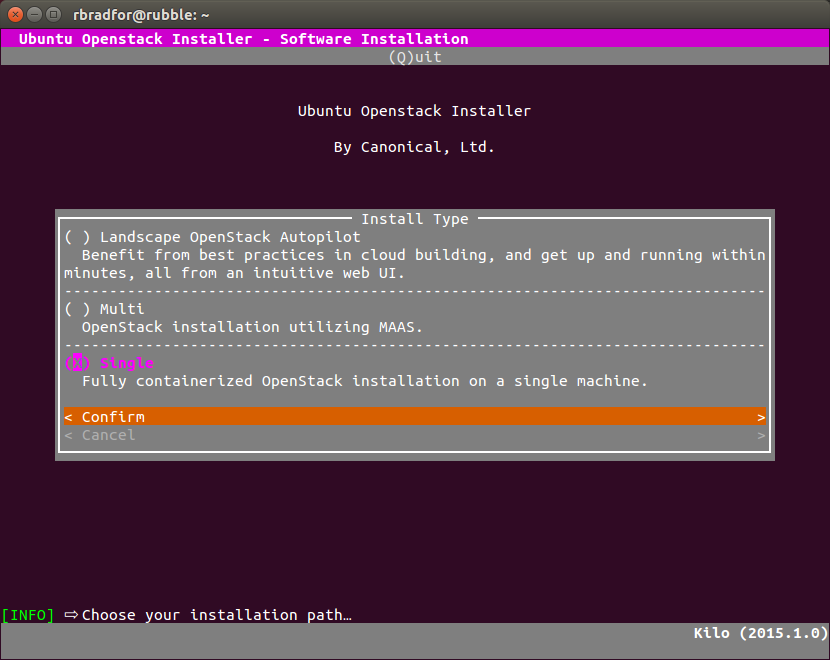

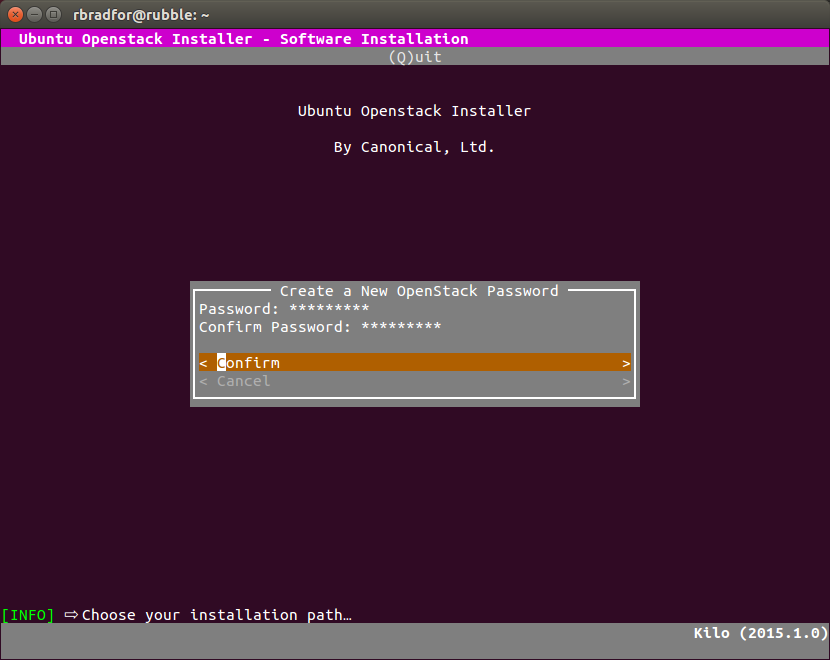

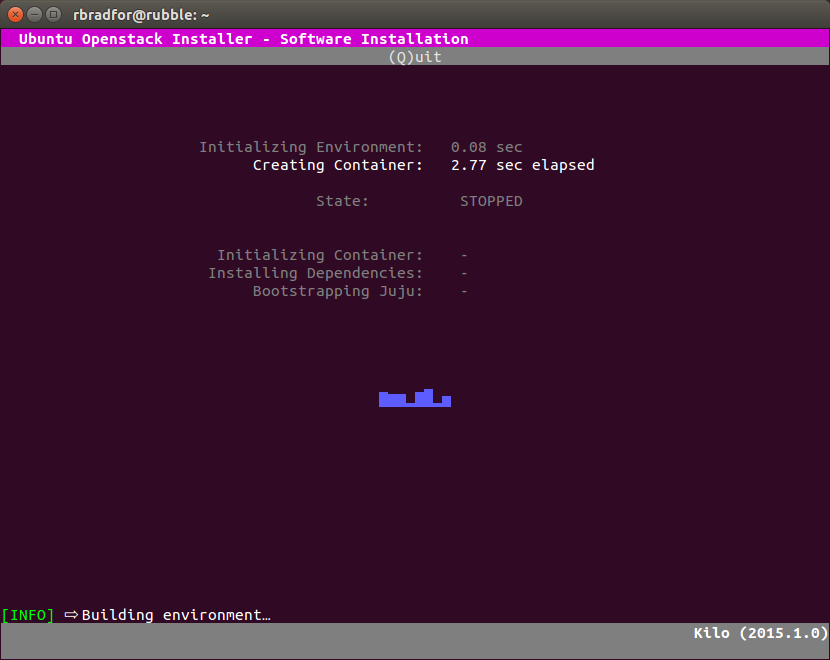

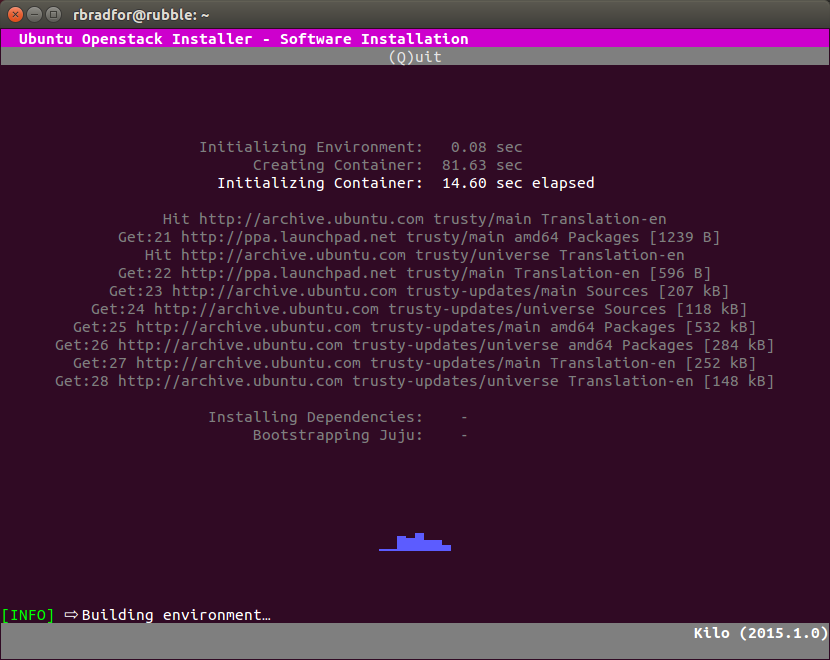

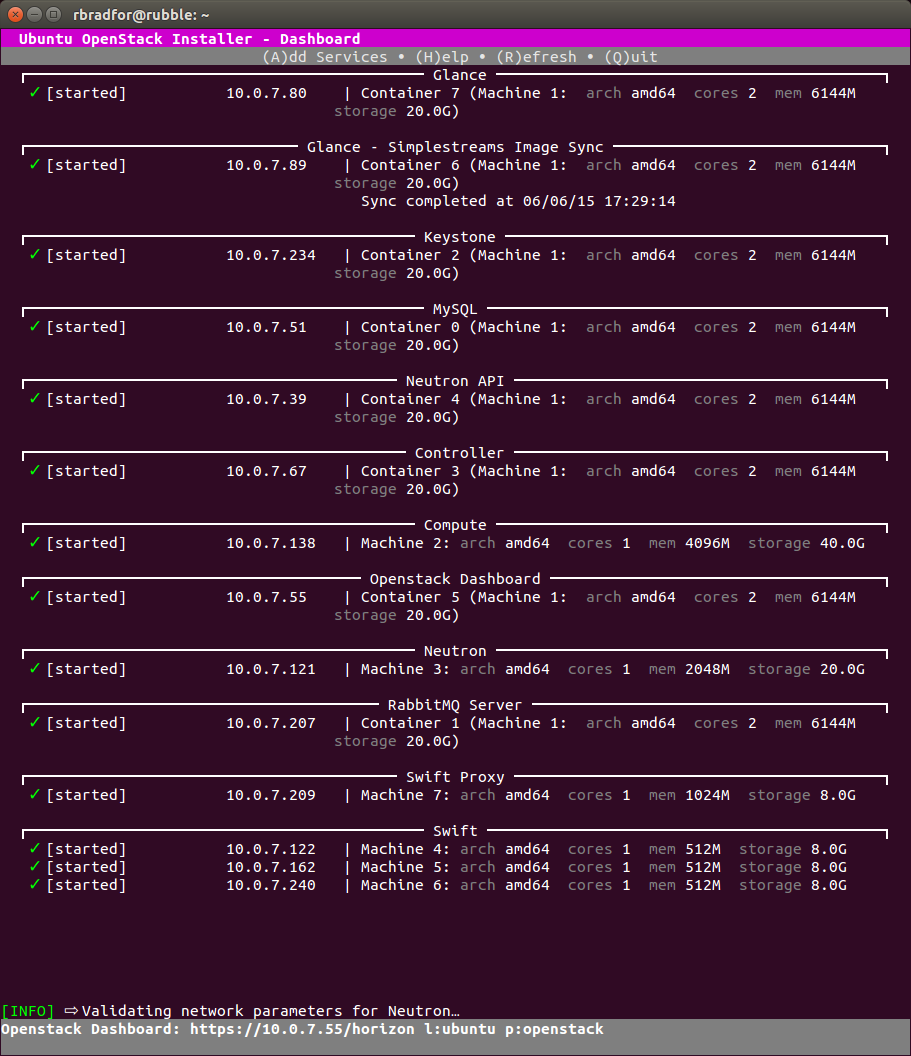

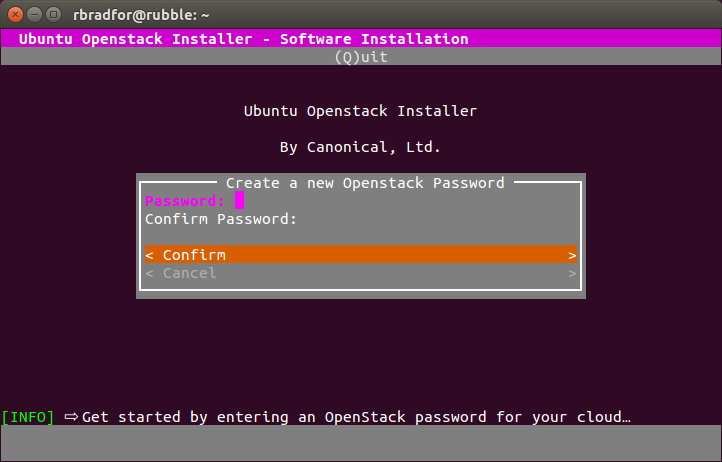

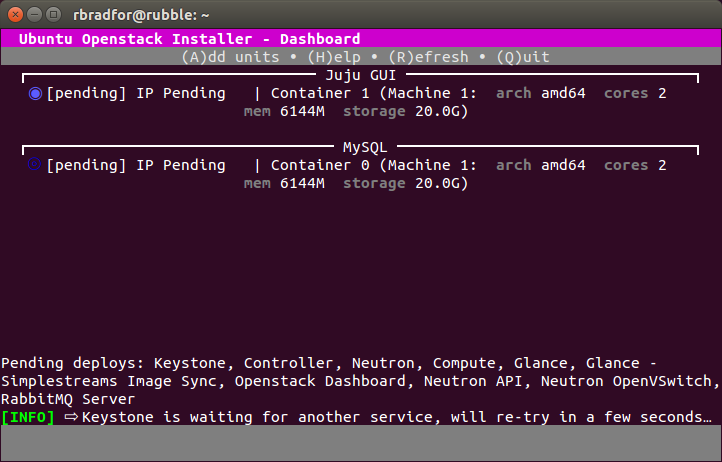

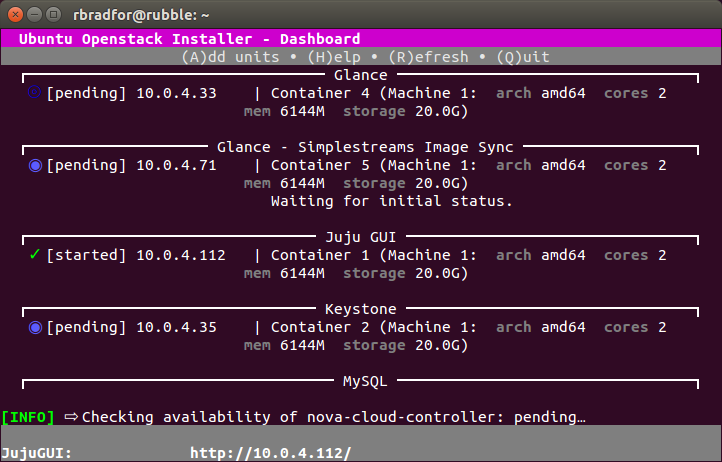

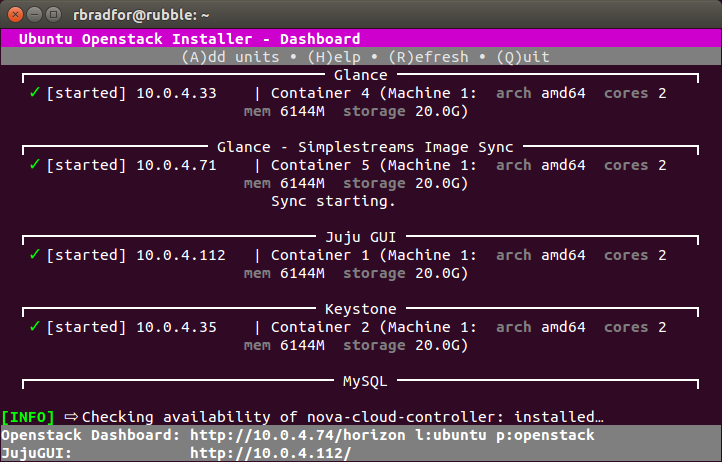

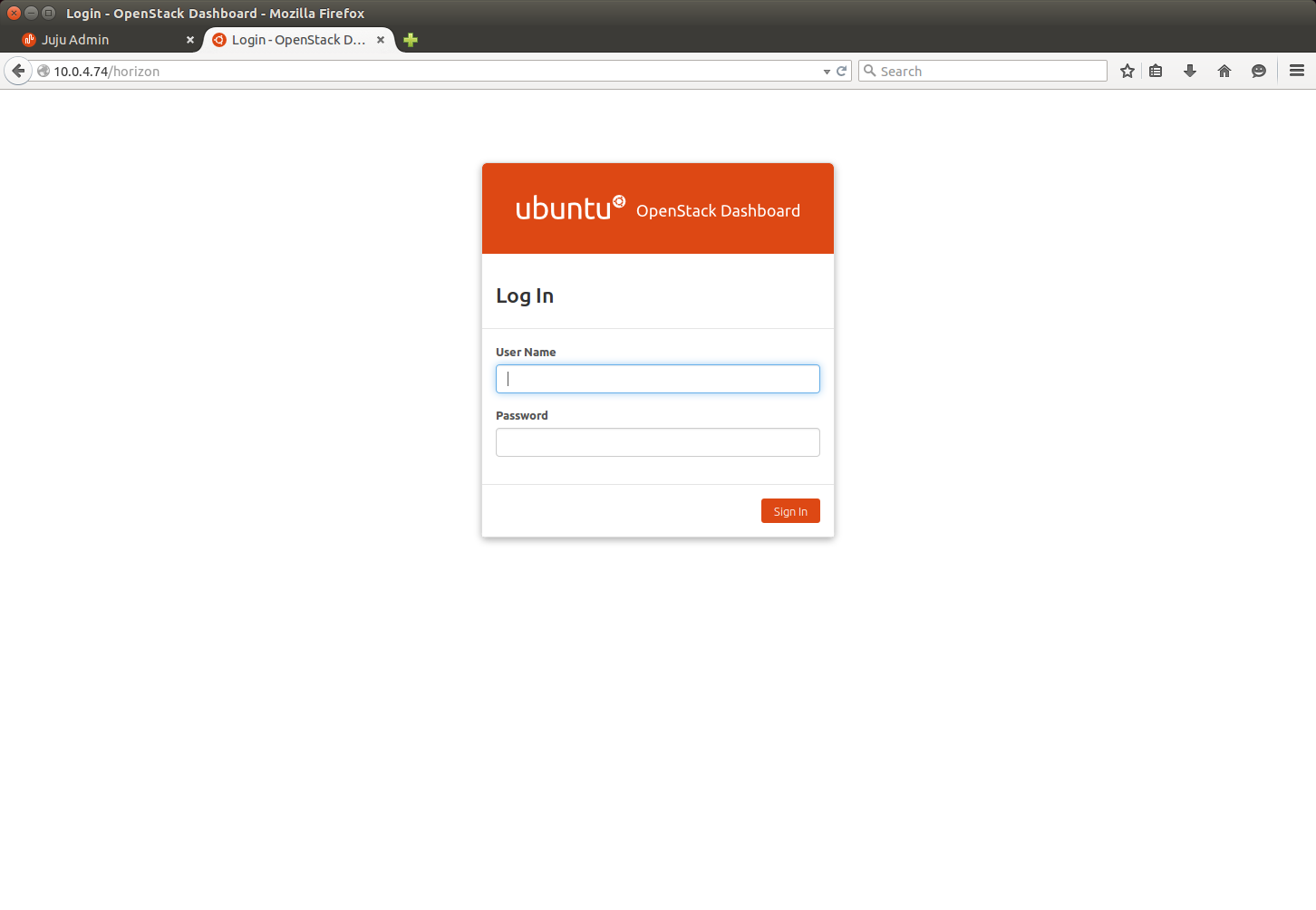

While I attended a number of sessions and took the Hands On Lab for Oracle OpenStack the proof is having a running local environment. My next post will talk about my experiences installing.