The Tiny Stack (Astro, SQLite, Litestream)

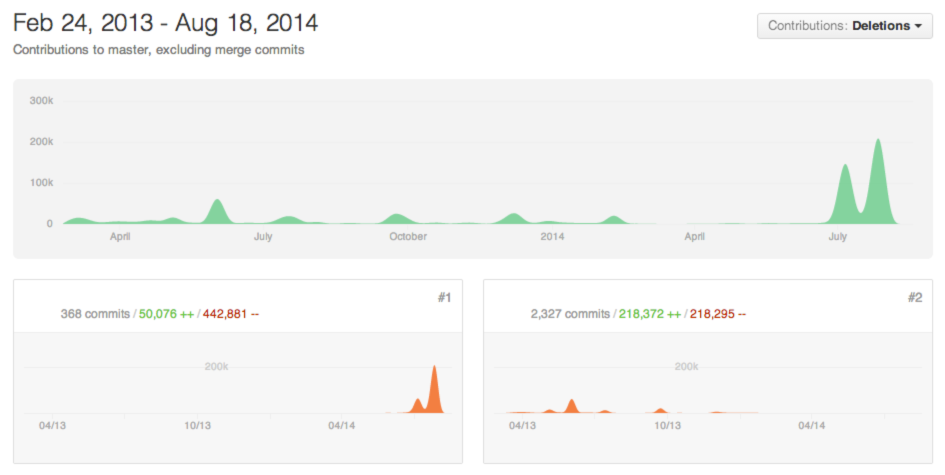

I spent many years in the LAMP stack, and our evolving programming ecosystem keeps producing new acronyms for technology stacks. New today is “The Tiny Stack”, consisting of Astro, “a modern meta-framework for JavaScript” (not my words), SQLite, and Litestream, which continuously streams SQLite changes. I’ve never been a fan of JavaScript, a necessary evil in modern stacks; it changes so rapidly that there is a constant stream of new products and never the time to learn any of them. Litestream is interesting. Replicating SQL operations to another database, the Change Data Capture (CDC) of your data, is nothing new. However, I had not thought that SQLite, which is embedded everywhere, could offer this type of capability.
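To make the replication idea a little more concrete: Litestream works from SQLite's write-ahead log (WAL), so the database it watches runs in WAL journal mode. The following is a minimal sketch of the application side only, assuming Python's built-in `sqlite3` module; the `tiny.db` file name, the `notes` table, and both function names are hypothetical and chosen purely for illustration.

```python
import os
import sqlite3
import tempfile


def open_wal_database(path: str) -> sqlite3.Connection:
    """Open a SQLite database and switch it to write-ahead logging."""
    conn = sqlite3.connect(path)
    # PRAGMA journal_mode returns the mode that is actually in effect.
    mode = conn.execute("PRAGMA journal_mode=WAL;").fetchone()[0]
    if mode.lower() != "wal":
        raise RuntimeError(f"could not enable WAL, got {mode!r}")
    return conn


def demo() -> tuple:
    """Create a throwaway database, write one row, and report its state."""
    # Hypothetical path and schema, for illustration only.
    workdir = tempfile.mkdtemp()
    db_path = os.path.join(workdir, "tiny.db")

    conn = open_wal_database(db_path)
    conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
    conn.execute("INSERT INTO notes (body) VALUES (?)", ("hello",))
    conn.commit()  # committed changes land in the WAL file first

    mode = conn.execute("PRAGMA journal_mode;").fetchone()[0]
    count = conn.execute("SELECT COUNT(*) FROM notes").fetchone()[0]
    conn.close()
    return mode, count


if __name__ == "__main__":
    print(demo())
```

The application itself needs no replication code. Litestream runs as a separate process alongside it, started with something like `litestream replicate tiny.db s3://my-bucket/tiny`, where the bucket name is a placeholder.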

Amazon Aurora Introduces Long-Awaited RDS Data API to Simplify Serverless Workloads

AWS Aurora Serverless version 2 has been out for well over a year (actually 20 months, since Apr 21, 2022), but one feature of version 1 that had not been available in version 2 is the Data API. This is for developers without SQL skills to have a RESTful interface to the database. However, it only works in AppSync, only for recent versions of PostgreSQL, and only in certain regions. I’ve never used it myself, but it is news.
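For a feel of what a Data API call looks like, here is a minimal sketch that only builds the request for the `ExecuteStatement` operation and does not contact AWS. The helper names, ARNs, database name, and query are all hypothetical. Note that the request still carries a SQL string, so the HTTP interface replaces the connection handling rather than the SQL itself.

```python
from typing import Any, Dict, List


def to_field(value: Any) -> Dict[str, Any]:
    """Map a Python value onto the Data API's typed field structure."""
    if value is None:
        return {"isNull": True}
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return {"booleanValue": value}
    if isinstance(value, int):
        return {"longValue": value}
    if isinstance(value, float):
        return {"doubleValue": value}
    return {"stringValue": str(value)}


def build_execute_statement_request(
    resource_arn: str,
    secret_arn: str,
    database: str,
    sql: str,
    params: Dict[str, Any],
) -> Dict[str, Any]:
    """Assemble keyword arguments for an ExecuteStatement call."""
    parameters: List[Dict[str, Any]] = [
        {"name": name, "value": to_field(value)} for name, value in params.items()
    ]
    return {
        "resourceArn": resource_arn,
        "secretArn": secret_arn,
        "database": database,
        "sql": sql,
        "parameters": parameters,
    }


# Placeholder identifiers, for illustration only.
request = build_execute_statement_request(
    resource_arn="arn:aws:rds:us-east-1:123456789012:cluster:example",
    secret_arn="arn:aws:secretsmanager:us-east-1:123456789012:secret:example",
    database="appdb",
    sql="SELECT name FROM users WHERE id = :id",
    params={"id": 42},
)
```

With boto3 installed and credentials configured, the dictionary could then be passed along as `boto3.client("rds-data").execute_statement(**request)`.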

Speaking of what is available in which AWS region, my recently released InstanceHunt lets you identify the instance families and types available in different regions across various AWS database services. I developed it in just a few days and released it only last week as a working MVP. Future goals are to include other clouds and other categories of services, such as Compute. The prior announcement may prompt a future version that tracks which service features are supported in which regions.

Stop Stalling And Start Your Dream Side Business In 2024

The title kinda says it all. As an aspiring entrepreneur, my pursuits have offered only minimal success over the decades and never a passive revenue stream. While the article did not provide valuable nuggets, the title did. One of my goals for 2024 is to step up my creation and release of side projects, regardless of whether each project is a source of revenue. I consider refining my design, development, testing, and implementation skills, and providing information of value, to be sources of soft income that showcase some of my diverse skills.

About ‘Digital Tech Trek Digest’

Most days I take some time early in the morning to scan my inbox newsletters, the news, LinkedIn, or other sources to read something new covering professional and personal topics of interest. Turning what I read into actionable notes within a short, committed time window gives me a summary of what I learned today, what I should learn and use, or what is of random interest. And thus my Digital Tech Trek.