The following outlines an approach to identifying and improving unit tests in an OpenStack project.

Obtain the source code

You can obtain a copy of the current source code for an OpenStack project at http://git.openstack.org. Active projects are categorized into openstack, openstack-dev, openstack-infra and stackforge.

NOTE: While you can find OpenStack projects on GitHub, these are just mirrors of the source repositories.

In this example I am going to use the Magnum project.

$ git clone git://git.openstack.org/openstack/magnum
$ cd magnum

Run the current tests

The first step should be to run the current tests to verify the current code. This is a good habit to get into, especially if you have made modifications and are returning to your code after some time. This will also create a virtual environment, which you will want to use later to test your changes.

$ tox -e py27

Should this fail, you may want to ensure all OpenStack developer dependencies are in place on your OS.

Identify unit tests to work on

You can use the code coverage of the unit tests to find places where the existing unit tests could be extended. The following command will produce an HTML report in the /cover directory of your project.

$ tox -e cover

The output will look similar to this example coverage output for Magnum. You can also produce a text-based version with:

$ coverage report -m

I will use this text version later for verification.

Working on a specific unit test

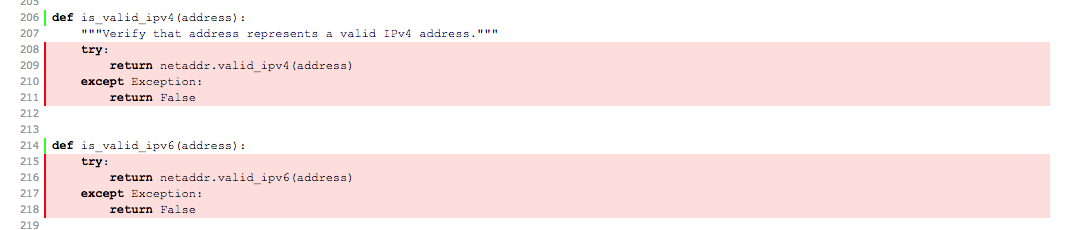

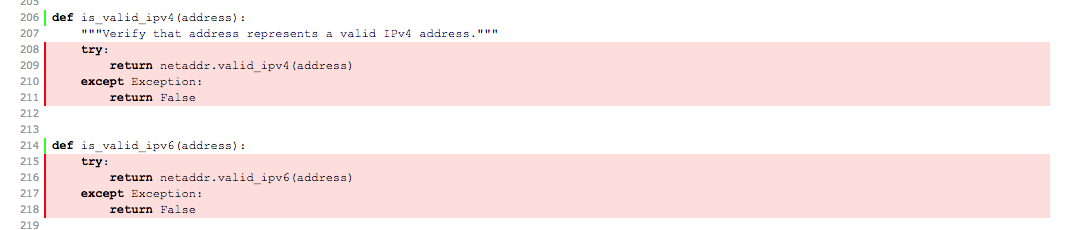

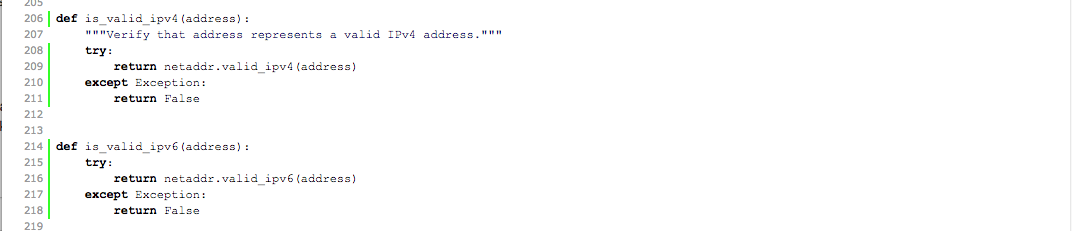

Drilling down into any individual file shows you the code that does not have unit test coverage. For example, in magnum/common/utils:

Once you have found a place to work on, identify the corresponding unit test file in the magnum/tests/unit sub-directory. In this example I am working on magnum/tests/unit/common/test_utils.py. You will want to run this individual unit test in the virtual environment you previously created.

$ source .tox/py27/bin/activate
$ testr run test_utils -- -f

You can now start making your changes in whatever editor you wish. You may also want to work interactively in Python at first to test and verify classes and methods, especially if you are unfamiliar with how the code functions. For example, using the same import found in test_utils.py, I started with these simple checks.

(py27)$ python
Python 2.7.6 (default, Mar 22 2014, 22:59:56)
[GCC 4.8.2] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> from magnum.common import utils
>>> utils.is_valid_ipv4('10.0.0.1') == True
True
>>> utils.is_valid_ipv4('') == False
True

I then created some appropriate unit tests for the is_valid_ipv4 and is_valid_ipv6 methods based on this interactive validation. The tests check not only valid values but also various boundary conditions for invalid values, including blank, non-numeric and out-of-range IP addresses.

def test_valid_ipv4(self):
    self.assertTrue(utils.is_valid_ipv4('10.0.0.1'))
    self.assertTrue(utils.is_valid_ipv4('255.255.255.255'))

def test_invalid_ipv4(self):
    self.assertFalse(utils.is_valid_ipv4(''))
    self.assertFalse(utils.is_valid_ipv4('x.x.x.x'))
    self.assertFalse(utils.is_valid_ipv4('256.256.256.256'))
    self.assertFalse(utils.is_valid_ipv4(
        'AA42:0000:0000:0000:0202:B3FF:FE1E:8329'))

def test_valid_ipv6(self):
    self.assertTrue(utils.is_valid_ipv6(
        'AA42:0000:0000:0000:0202:B3FF:FE1E:8329'))
    self.assertTrue(utils.is_valid_ipv6(
        'AA42::0202:B3FF:FE1E:8329'))

def test_invalid_ipv6(self):
    self.assertFalse(utils.is_valid_ipv6(''))
    self.assertFalse(utils.is_valid_ipv6('10.0.0.1'))
    self.assertFalse(utils.is_valid_ipv6('AA42::0202:B3FF:FE1E:'))
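If you want to experiment with these checks outside of a Magnum checkout, the following is a minimal, self-contained sketch. It assumes Python 3 and uses the standard library ipaddress module as a stand-in for the two validators; the functions and the IPValidationTestCase class here are hypothetical illustrations, and Magnum's own implementation in magnum/common/utils may behave differently. The same inputs are driven from a table rather than written as individual assertions.

```python
import ipaddress
import unittest


def is_valid_ipv4(address):
    # Hypothetical stand-in for magnum.common.utils.is_valid_ipv4,
    # built on the standard library; Magnum's version may differ.
    try:
        ipaddress.IPv4Address(address)
        return True
    except ValueError:
        return False


def is_valid_ipv6(address):
    # Hypothetical stand-in for magnum.common.utils.is_valid_ipv6.
    try:
        ipaddress.IPv6Address(address)
        return True
    except ValueError:
        return False


class IPValidationTestCase(unittest.TestCase):
    # (validator, input, expected result) rows, using the same inputs
    # as the tests in this post.
    CASES = [
        (is_valid_ipv4, '10.0.0.1', True),
        (is_valid_ipv4, '255.255.255.255', True),
        (is_valid_ipv4, '', False),
        (is_valid_ipv4, 'x.x.x.x', False),
        (is_valid_ipv4, '256.256.256.256', False),
        (is_valid_ipv4, 'AA42:0000:0000:0000:0202:B3FF:FE1E:8329', False),
        (is_valid_ipv6, 'AA42:0000:0000:0000:0202:B3FF:FE1E:8329', True),
        (is_valid_ipv6, 'AA42::0202:B3FF:FE1E:8329', True),
        (is_valid_ipv6, '', False),
        (is_valid_ipv6, '10.0.0.1', False),
        (is_valid_ipv6, 'AA42::0202:B3FF:FE1E:', False),
    ]

    def test_cases(self):
        for validator, address, expected in self.CASES:
            # subTest reports each failing row separately.
            with self.subTest(validator=validator.__name__, address=address):
                self.assertEqual(expected, validator(address))


# Run the test case programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(IPValidationTestCase)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

A table like this makes it cheap to add another boundary value, at the cost of all rows sharing a single test method name in the report.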

After making these changes, run the test again as previously demonstrated to verify that your modified test works.

$ testr run test_utils -- -f
running=OS_STDOUT_CAPTURE=${OS_STDOUT_CAPTURE:-1} \
OS_STDERR_CAPTURE=${OS_STDERR_CAPTURE:-1} \
OS_TEST_TIMEOUT=${OS_TEST_TIMEOUT:-160} \
${PYTHON:-python} -m subunit.run discover -t ./ ${OS_TEST_PATH:-./magnum/tests/unit} --list -f
running=OS_STDOUT_CAPTURE=${OS_STDOUT_CAPTURE:-1} \
OS_STDERR_CAPTURE=${OS_STDERR_CAPTURE:-1} \
OS_TEST_TIMEOUT=${OS_TEST_TIMEOUT:-160} \
${PYTHON:-python} -m subunit.run discover -t ./ ${OS_TEST_PATH:-./magnum/tests/unit} --load-list /tmp/tmpDMP50r -f
Ran 59 (+1) tests in 0.824s (-0.016s)
PASSED (id=19)

If your tests fail you will see a FAILED message like the one below. I find it useful to write a failing test intentionally just to validate that the testing process is actually working.

${PYTHON:-python} -m subunit.run discover -t ./ ${OS_TEST_PATH:-./magnum/tests/unit} --load-list /tmp/tmpsZlk3i -f
======================================================================
FAIL: magnum.tests.unit.common.test_utils.UtilsTestCase.test_invalid_ipv6
tags: worker-0
----------------------------------------------------------------------
Empty attachments:
  stderr
  stdout

Traceback (most recent call last):
  File "magnum/tests/unit/common/test_utils.py", line 98, in test_invalid_ipv6
    self.assertFalse(utils.is_valid_ipv6('AA42::0202:B3FF:FE1E:832'))
  File "/home/rbradfor/os/openstack/magnum/.tox/py27/local/lib/python2.7/site-packages/unittest2/case.py", line 672, in assertFalse
    raise self.failureException(msg)
AssertionError: True is not false
Ran 55 (-4) tests in 0.805s (-0.017s)
FAILED (id=20, failures=1 (+1))

Confirming your new unit tests

You can verify that this has improved the coverage percentage by re-running the coverage commands. I use the text-based version as an easy way to see a decrease in the number of lines not covered.

Before

$ coverage report -m | grep "common/utils"
magnum/common/utils 273 94 76 38 62% 92-94, 105-134, 151-157, 208-211, 215-218, 241-259, 267-270, 275-279, 325, 349-384, 442, 449-453, 458-459, 467, 517-518, 530-531, 544

$ tox -e cover

After

$ coverage report -m | grep "common/utils"
magnum/common/utils 273 86 76 38 64% 92-94, 105-134, 151-157, 241-259, 267-270, 275-279, 325, 349-384, 442, 449-453, 458-459, 467, 517-518, 530-531, 544

I can see 8 lines of improvement (the missing count drops from 94 to 86), which I can also verify by looking at the HTML version.
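The before and after comparison can also be done programmatically. The following is a small sketch, not part of coverage or tox, that expands the missing-line column of the two report lines above and shows which line numbers are no longer reported. The helper name expand_ranges is hypothetical, and the sketch assumes the column contains only single numbers and start-end ranges, as it does here.

```python
def expand_ranges(missing):
    """Expand a column such as '92-94, 325' into a set of line numbers."""
    lines = set()
    for part in missing.split(','):
        part = part.strip()
        if not part:
            continue
        if '-' in part:
            start, end = part.split('-')
            lines.update(range(int(start), int(end) + 1))
        else:
            lines.add(int(part))
    return lines


# Missing columns copied from the two report lines in this post.
before = ('92-94, 105-134, 151-157, 208-211, 215-218, 241-259, 267-270, '
          '275-279, 325, 349-384, 442, 449-453, 458-459, 467, 517-518, '
          '530-531, 544')
after = ('92-94, 105-134, 151-157, 241-259, 267-270, 275-279, 325, '
         '349-384, 442, 449-453, 458-459, 467, 517-518, 530-531, 544')

newly_covered = sorted(expand_ranges(before) - expand_ranges(after))
print(len(newly_covered), newly_covered)
# prints: 8 [208, 209, 210, 211, 215, 216, 217, 218]
```

The two ranges that disappeared, 208-211 and 215-218, account for the 8 lines.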


Additional Testing

Make sure you run the full test suite before committing. This runs all tests against multiple Python versions and runs the PEP8 code style validation for your modified unit tests.

$ tox

Here are some examples of PEP8 problems with my improvements to the unit tests.

pep8 runtests: commands[0] | flake8
./magnum/tests/unit/common/test_utils.py:88:80: E501 line too long (88 > 79 characters)
./magnum/tests/unit/common/test_utils.py:91:80: E501 line too long (87 > 79 characters)
...
./magnum/tests/unit/common/test_utils.py:112:32: E231 missing whitespace after ','
./magnum/tests/unit/common/test_utils.py:113:32: E231 missing whitespace after ','
./magnum/tests/unit/common/test_utils.py:121:30: E231 missing whitespace after ','
...
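Both kinds of problem are simple to fix. As a hedged illustration (the variable names and data here are made up for the example and are not taken from test_utils.py), E231 is resolved by adding a space after each comma, and E501 by breaking the statement inside its brackets so that no line exceeds 79 characters.

```python
# E231: flake8 expects a space after each comma,
# so write ('10.0.0.1', True) rather than ('10.0.0.1',True).
ipv4_cases = [('10.0.0.1', True), ('256.256.256.256', False)]

# E501: keep lines within 79 characters by breaking inside the brackets
# instead of writing the whole list on one long line.
ipv6_cases = [
    ('AA42:0000:0000:0000:0202:B3FF:FE1E:8329', True),
    ('AA42::0202:B3FF:FE1E:', False),
]
```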

Submitting your work

In order for your time and effort to be included in the OpenStack project, there are a number of key details you need to follow, which I outlined in contributing to OpenStack. Specifically, these documents are important.

You do not have to be familiar with these procedures in order to look at the code, or even to work on improving it. You will need to follow the steps outlined in these links if you want to contribute your code. Remember, if you are new, the best way to get help is to jump onto the IRC channel of the project you are interested in.

This example, along with additions for several other methods, was submitted (see patch). It was reviewed and ultimately approved.

References

Some additional information about the tools and processes can be found in these OpenStack documentation and wiki pages.