Well, it’s Sunday night so I will put this down to being the weekend. The background to being caught out is a request I made to my local Users Group mailing list for some information on people’s environments, because I wanted to do some empirical data analysis without having any more knowledge of the systems.

In summary (without the surrounding fanfare), I was seeking:

SELECT VERSION(); SHOW STATUS; SHOW VARIABLES; // Optional

I was however perplexed why my first data point analysis (Read/Write ratio) using the Status values Com_insert, Com_update, Com_delete and Com_select was not always giving me expected results. In particular, a number of server results showed 0 for values while I knew the results came from working MySQL environments.
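
As a rough sketch of that first data point, here is how the ratio could be worked out from a saved copy of the status output. It assumes the output is tab-separated Variable_name/Value lines, as the mysql client produces in batch mode. The function names parse_status and read_write_ratio are my own, purely for illustration.

```python
def parse_status(text):
    """Parse tab-separated 'Variable_name<TAB>Value' lines into a dict."""
    status = {}
    for line in text.splitlines():
        parts = line.strip().split("\t")
        if len(parts) != 2 or parts[0] == "Variable_name":
            continue  # skip blank lines, malformed lines and the header row
        status[parts[0]] = parts[1]
    return status


def read_write_ratio(status):
    """Return reads / writes, or None when no writes were counted."""
    reads = int(status.get("Com_select", 0))
    writes = sum(
        int(status.get(name, 0))
        for name in ("Com_insert", "Com_update", "Com_delete")
    )
    if writes == 0:
        return None  # ratio is undefined; also a hint the counters look suspect
    return reads / writes
```

A result of None on a server known to be busy is the sort of thing that should raise an eyebrow, since it means no inserts, updates or deletes were counted at all.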

So, a sanity check with good friend Morgan, and I get the response that answers the dilemma: SHOW STATUS defaults to session-based statistics in 5.0+, there’s a million people it’s caught out, and many bugs filed about “xyz variable not being incremented”.

So I’m really seeking:

SELECT VERSION(); SHOW GLOBAL STATUS; SHOW GLOBAL VARIABLES; // Optional

Well, that’s my MySQL trivia point for the day. Between versions 4.1 and 5.0, MySQL changed the default scope of SHOW STATUS from global to session. The MySQL 5.0 Manual also states this.

UPDATE 23 Sep 2006

Following Eric’s Show @&!# status again!, I then read Bug #19093, and it was great to see Monty comment (that’s open source for you, enabling even founders to mix with the common folk), and I’d tend to agree with his comments. MySQL has attempted to correct something.

Perhaps the best compromise to the problem is to deprecate and drop the SHOW STATUS command, and make the scope component GLOBAL|SESSION compulsory, so SHOW GLOBAL STATUS or SHOW SESSION STATUS are the only valid commands!

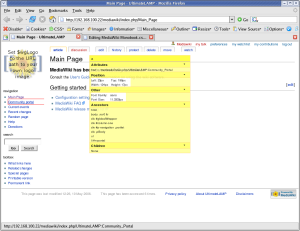

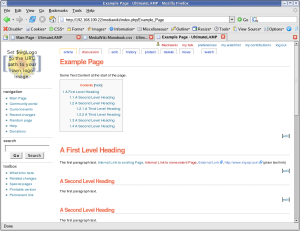

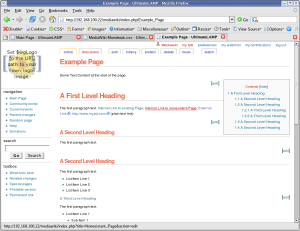

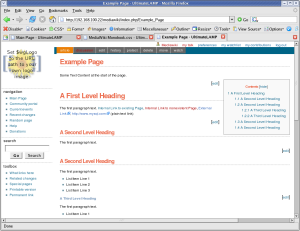

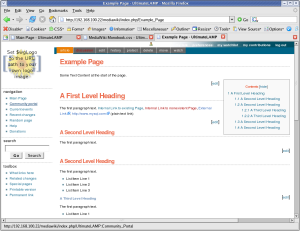
